My design motivation for this project came from a prayer. I asked God, “If You can change everyone’s mind, why do You want us to preach?” Then I felt God answered, “Because preaching benefits both the ones you preach to and yourself.”

The next day, while I was reading a news article about the effectiveness of AI-based tutoring, the prayer came back to mind. What if students could learn to provide personalized instruction for each other with the help of AI?

In this way, they would learn not only the course material but also how to build each other up. With Dr. Ellis as my mentor, I started to design a platform that integrates the merits of AI-based tutoring and collaborative learning.

Literature Review

I first began an unstructured literature review in the fields of “AI-based/Intelligent Tutoring System (ITS)” and “Collaborative Learning System (CLS)”, skimming over 50 publications and taking detailed notes on 17 articles.

In week 1, I worked to understand the general frameworks of ITS and CLS, learning how the focus of each field has shifted over the past decades. In week 2, I developed a more in-depth understanding of the Student Module and the pedagogical methods used in ITS, and surveyed some previous attempts at integrating ITS and CLS. As I tried to narrow down my focus, I was inspired by the concept of “meta-cognitive skills.”

I. Meta-cognitive Skills

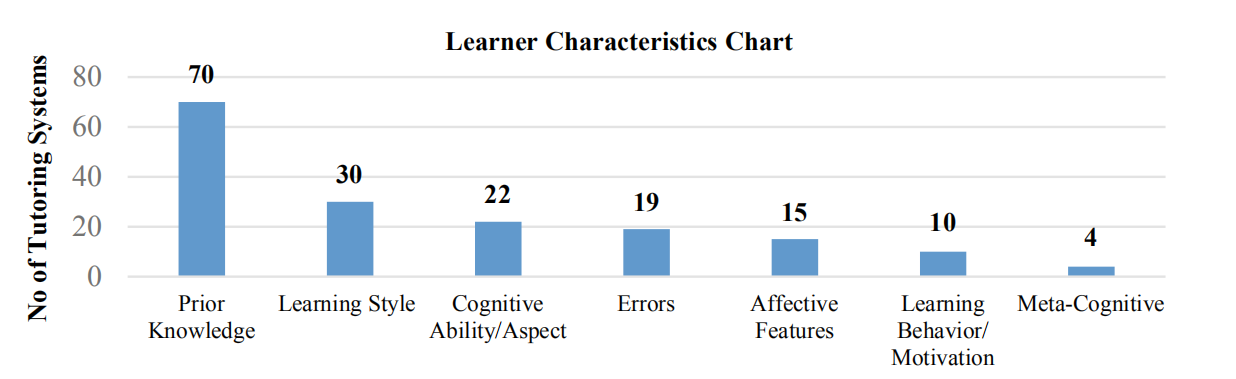

Conati referred to meta-cognitive skills as “domain-independent abilities that are an important aspect of

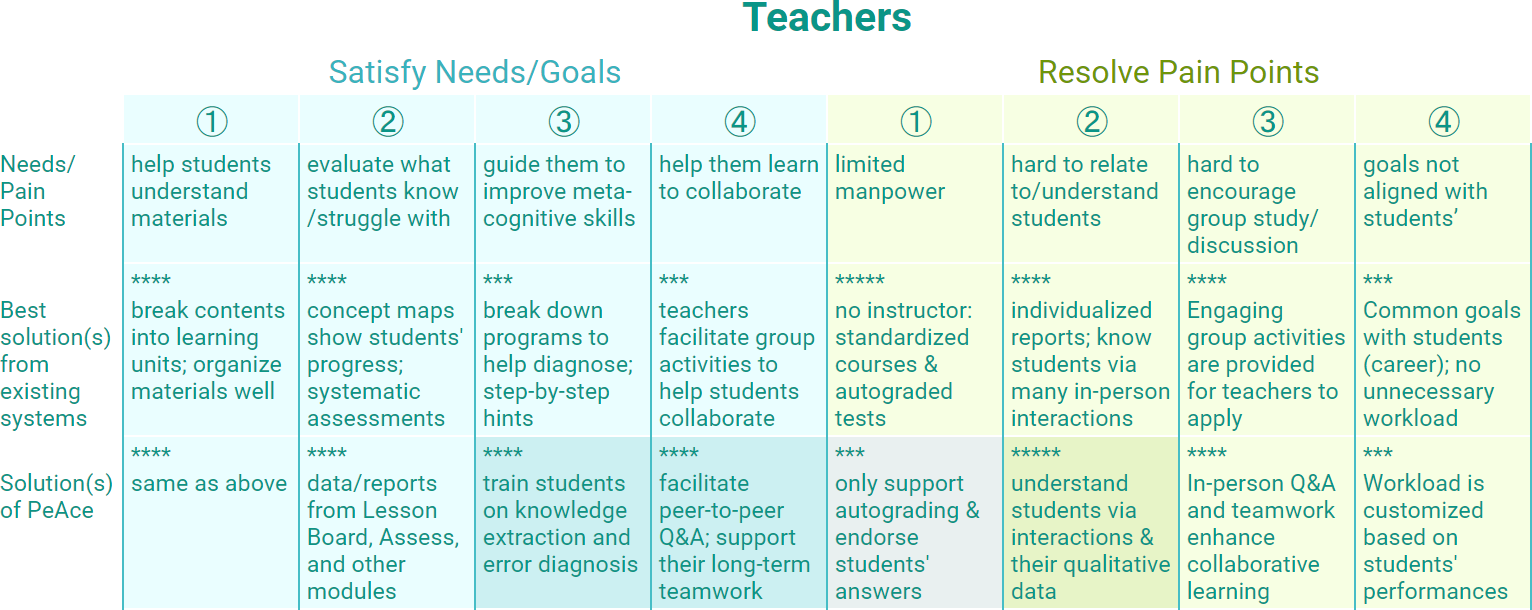

knowing how to learn in general” and proposed them as the “new challenges and directions” for ITS in 2009 [1]. In the figure below, Kumar and Bharti summarized the relative frequency of each learner characteristic being addressed in ITSs across publications from 2002 to 2017, showing that

meta-cognitive skills were least frequently addressed when compared with other major factors [2].

Reproduced from figure 2 from [2]

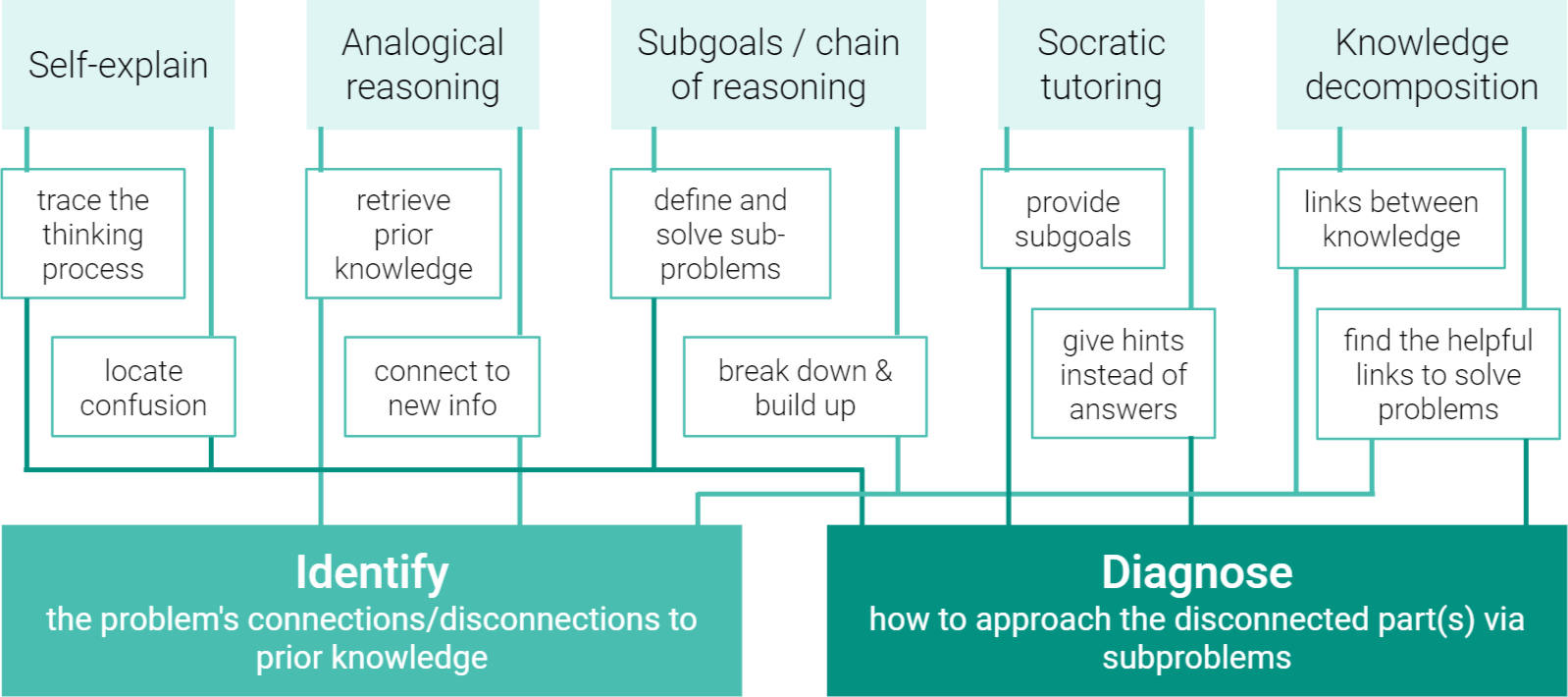

Conati experimented on two aspects of meta-cognitive skills: self-explaining (allowing students to explain their thinking process) and analogical reasoning (learning from examples and being able to work on variations) [1]. These two aspects echo the methodologies I read about in other publications, including Heffernan’s attempt to help students break down a problem into subgoals and develop a chain of reasoning [3], the Socratic tutoring software “Sask” that provides hints instead of direct answers [4], and the knowledge decomposition of Squirrel AI, which links concepts with a multi-layer net [5].

The figure below presents how I consolidated these five concepts based on their connections.

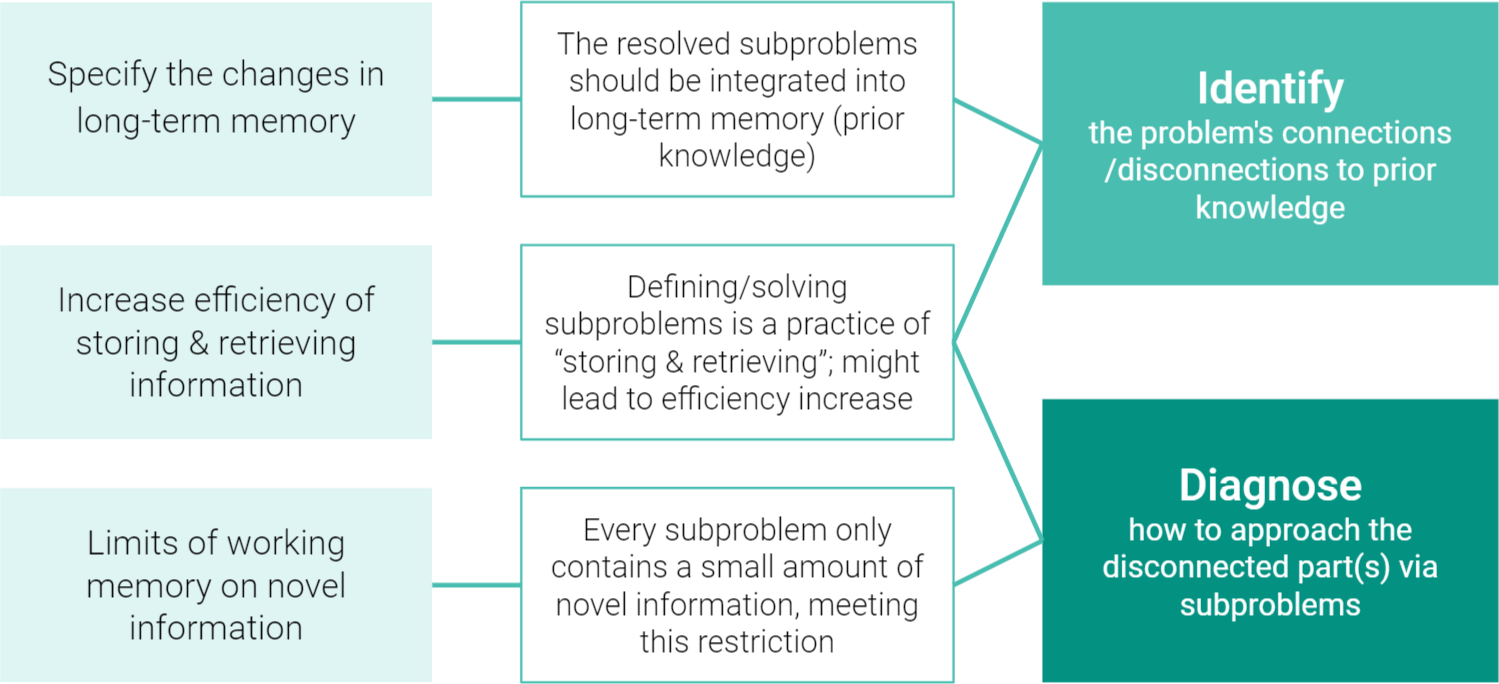

Besides learning from the ITS/Education field, I also took into account the cognitive perspective of learning. Kirschner, Sweller, and Clark stated that instruction should i) specify what has been changed in long-term memory, ii) increase the efficiency of storing or retrieving information from long-term memory, and iii) acknowledge the limits of working memory when dealing with novel information [6].

This statement aligns with the two core considerations I summarized above.

An ITS/CLS should provide sufficient guidance to help students go through the processes of “understanding the connections” and “diagnosing specific difficulties,” which I identified as the core meta-cognitive skills for learning. It should then pass these skills on to students rather than carry out the whole process for them.

Only after students learn to conduct this process independently will their meta-cognitive skills improve.

II. How Interactions Help

I carried out further investigation into research that implemented peer-to-machine or peer-to-peer interactions in students’ learning processes. Leelawong and Biswas established a system where students learned by teaching an artificial agent, and they found that these students learned and gained meta-cognitive skills better than the group that was taught but didn’t teach [7]. Herman and Azad found that, compared with ordinary lectures, i) peer instruction significantly decreased students’ average time spent on the course and their stress, ii) collaborative problem solving significantly decreased students’ perceived difficulty of the course, and iii) both conditions significantly increased students’ sense of belonging [8].

What might be the contributing factors to these benefits? Tullis and Goldstone stated that

peer instruction “facilitates the meta-cognitive processes of detecting errors and assessing the coherence of an answer” [9]. In my view, peer instruction is a segment of collaborative problem solving, which involves more forms of communications, social skills, and allocation of tasks; collaborative problem solving (CPS) retains the cognitive benefits of peer instruction, but it requires more effort. To better understand it, I turned to

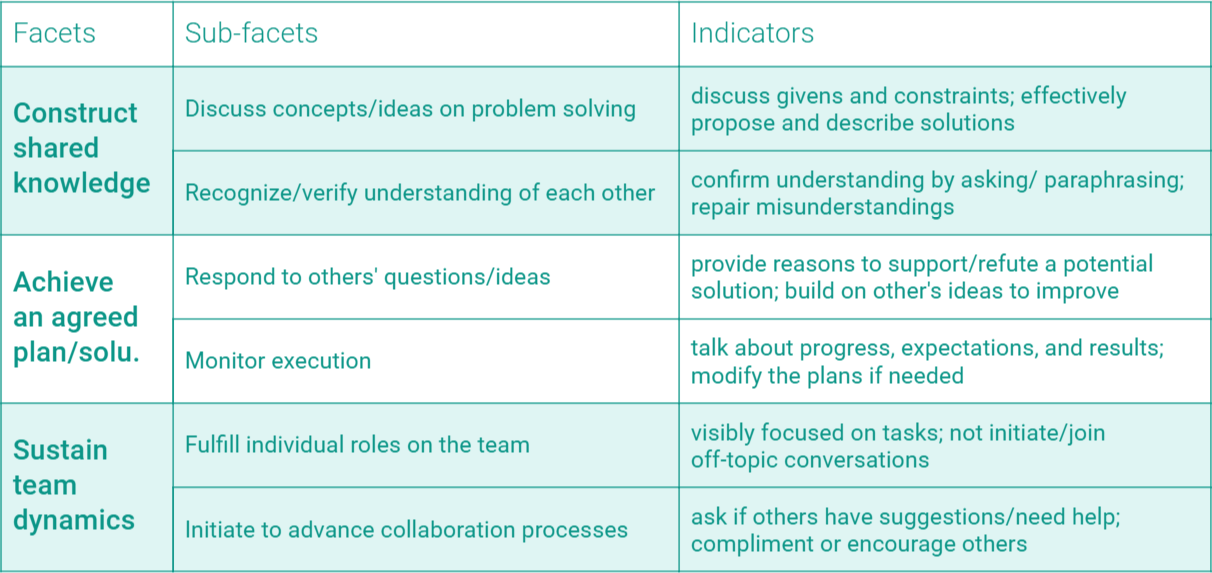

a generalized CPS model proposed by Sun et al., as summarized below [10].

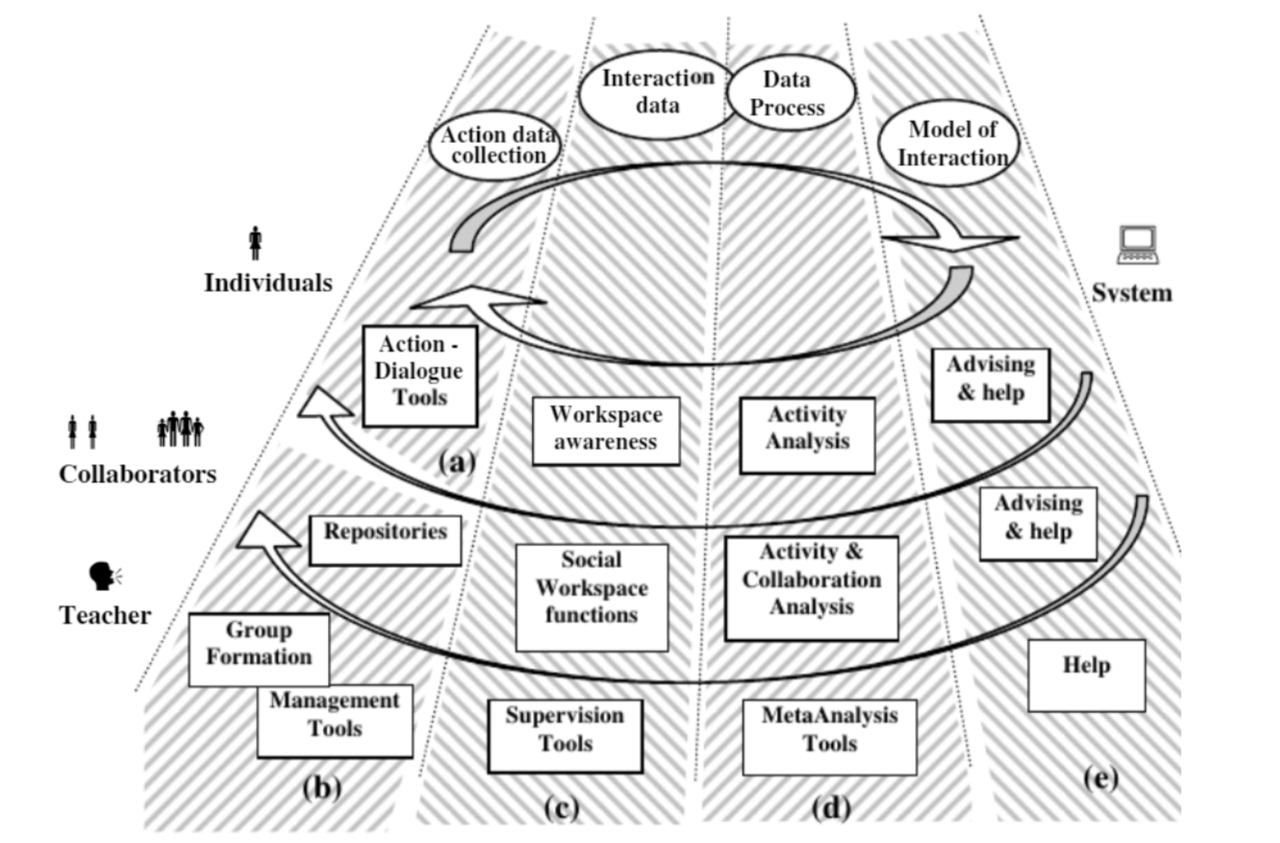

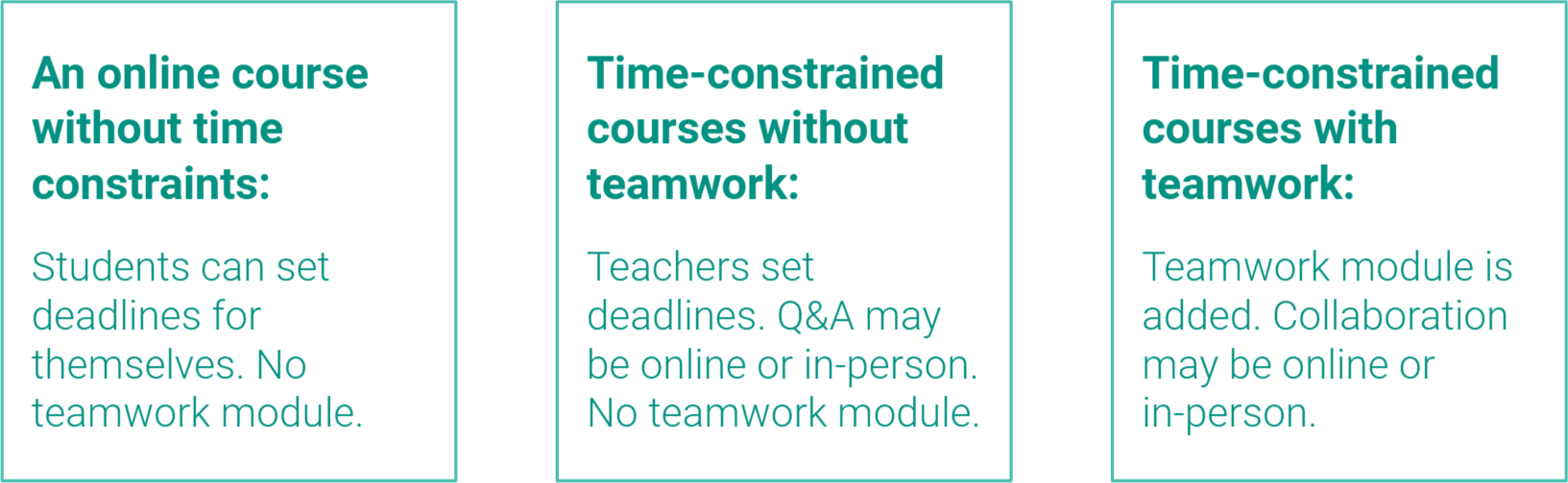

This collaborative problem solving (CPS) process occurs in a bigger system that involves multiple agents, as illustrated by Dimitracopoulou in the figure below [11]. In my view, CPS mainly takes place between collaborators; however, the success of each (sub-)facet of CPS is heavily affected by other components of the system below, including individuals’ mental states, teachers’ evaluations of students, and the interactions between different agents.

Reproduced from figure 1 from [11]

This systematic framework inspired me to design a system, instead of merely a CPS process.

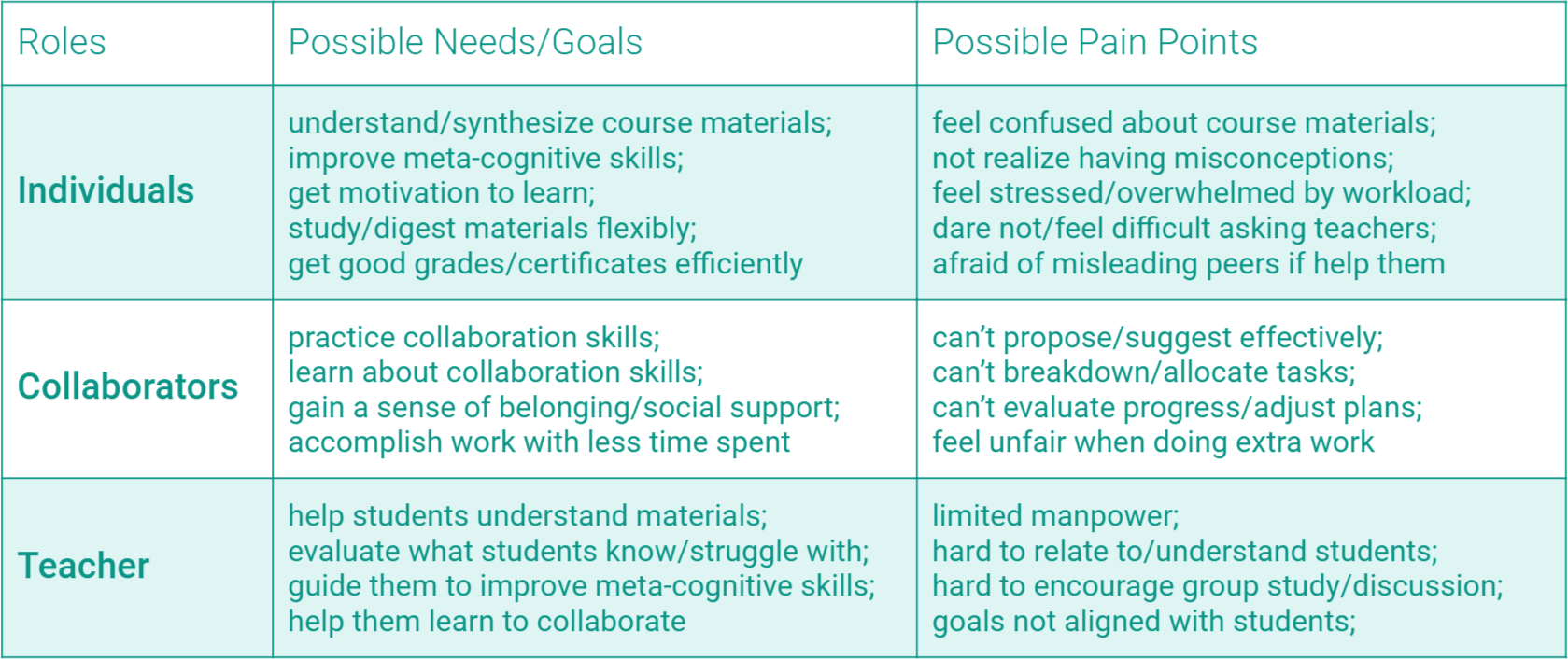

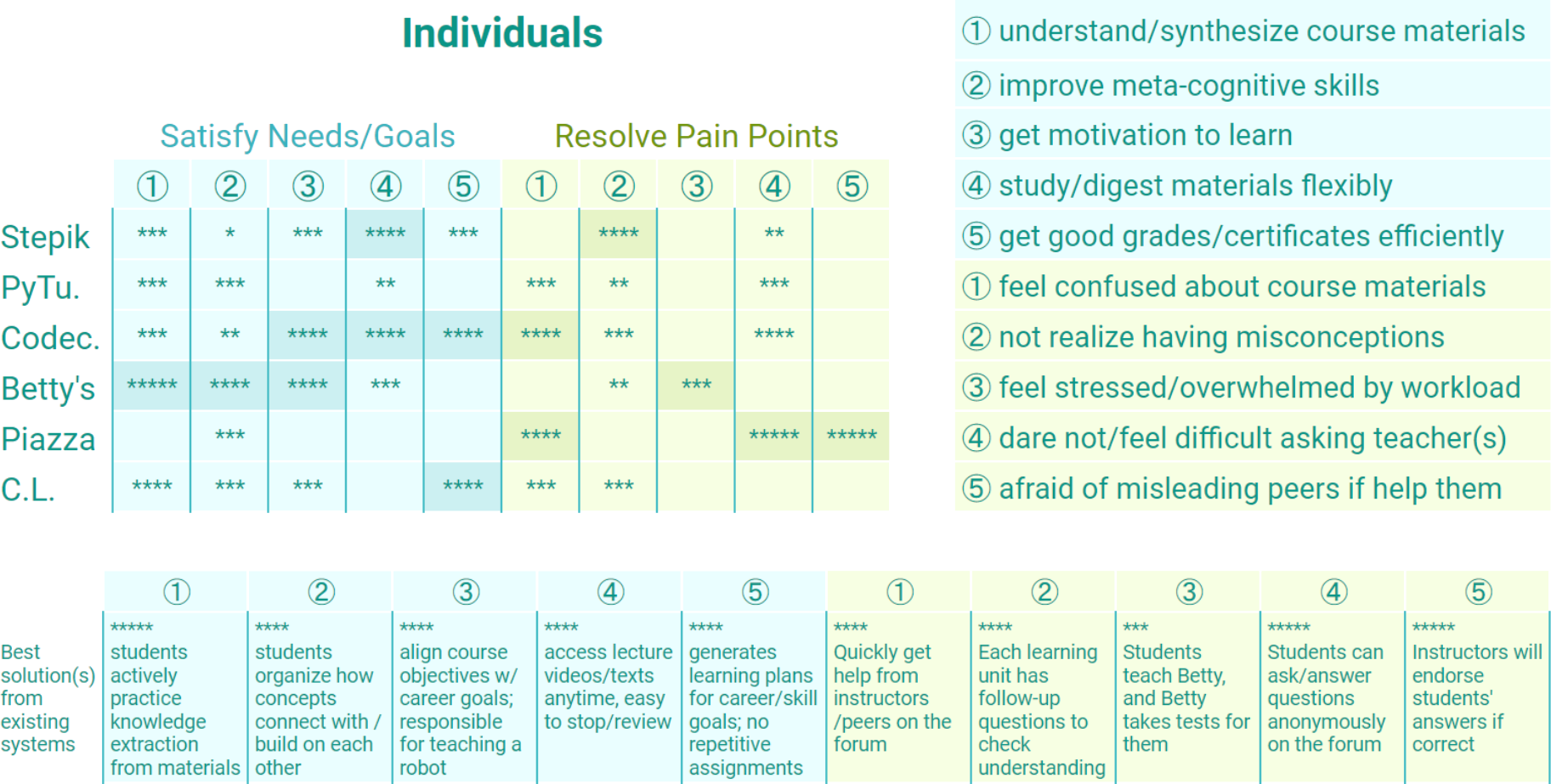

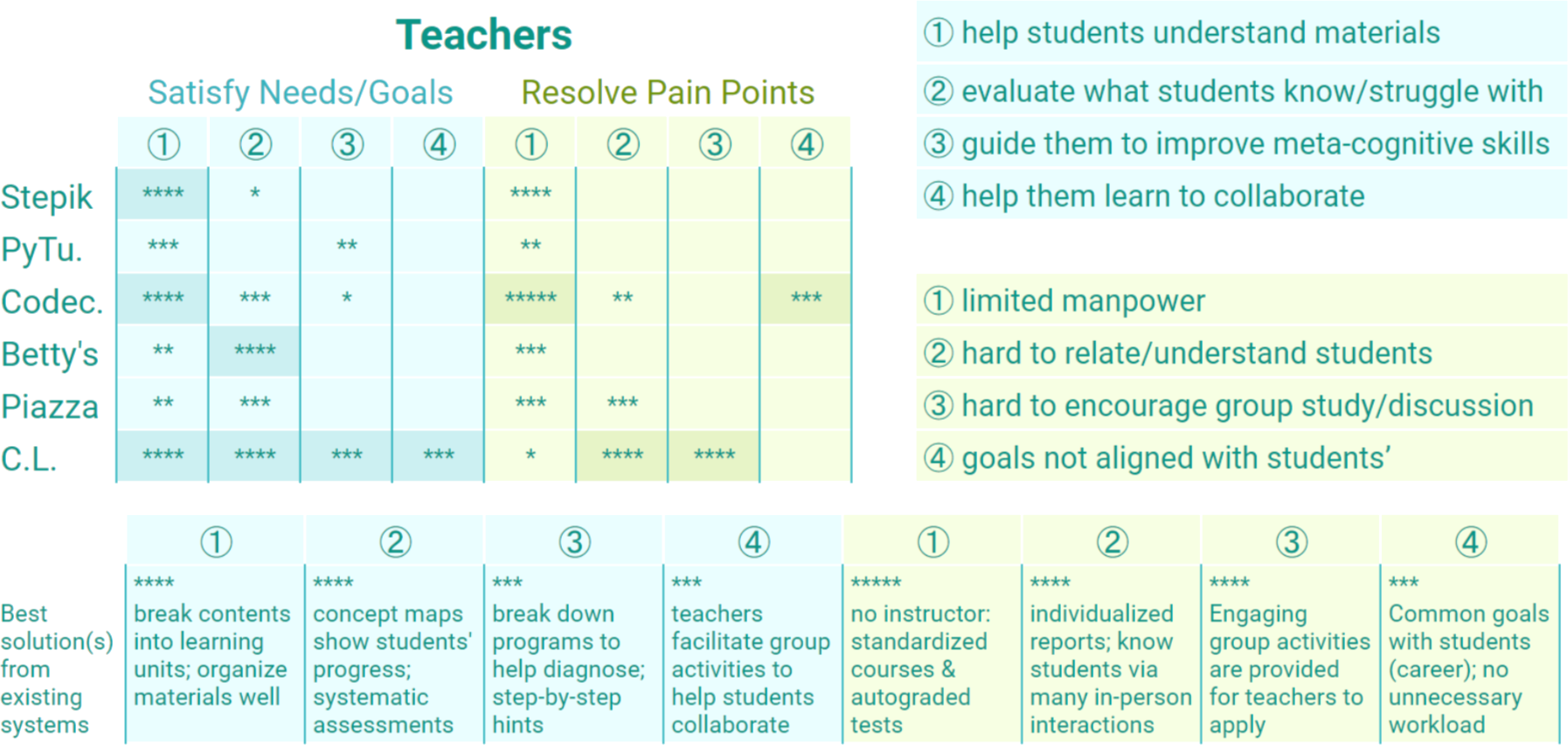

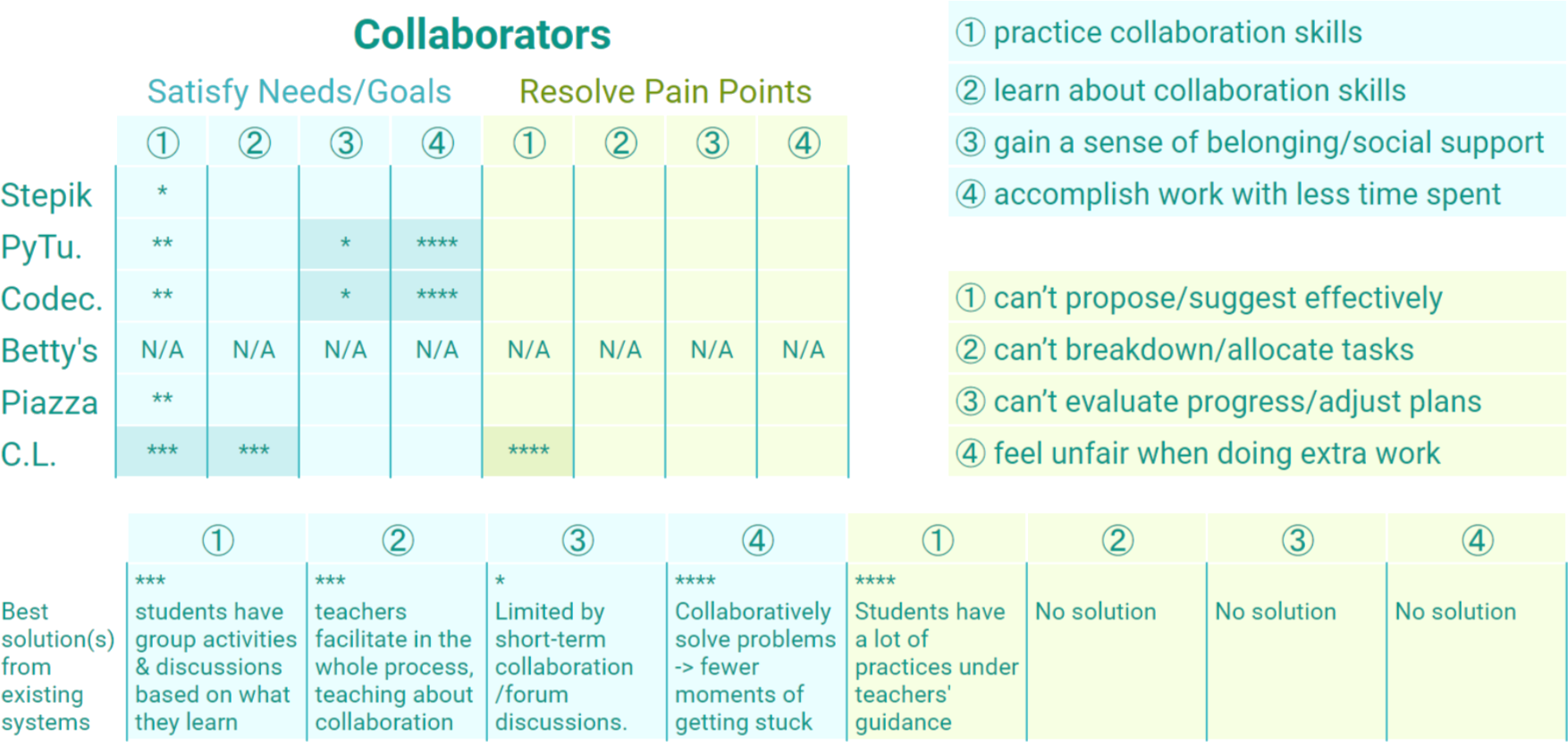

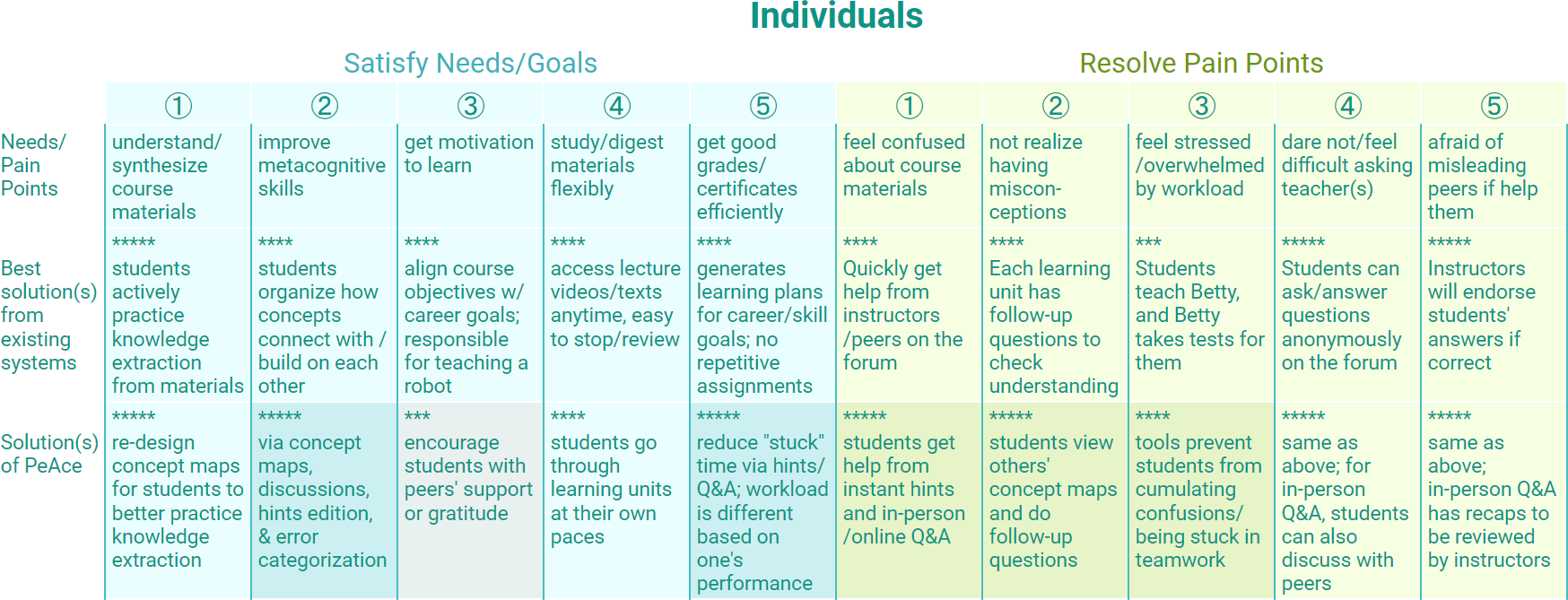

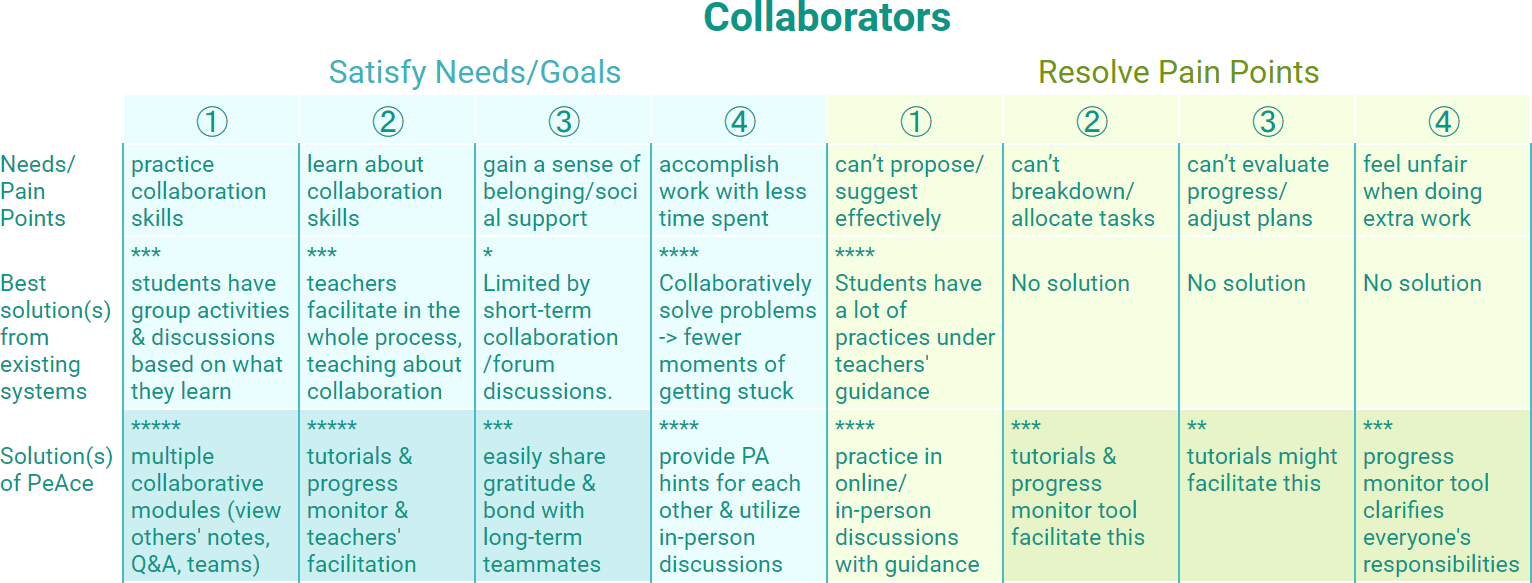

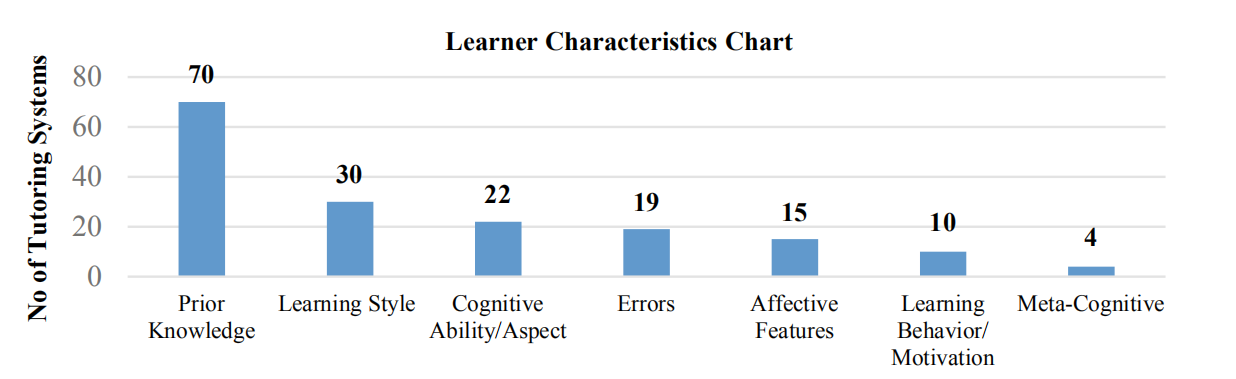

I tried to empathize with different agents’ possible needs/goals and pain points in the table below.

In the following Inspiration Board, I evaluate how existing ITS/CLS platforms address the needs or avoid the pains, seeking inspiration from their design solutions.

Inspiration Board

I focus on platforms that deal with coding or logical thinking rather than those that simply provide definitions or facts, because learning tasks that require students to work on multi-step problems are more likely to improve their meta-cognitive skills. I reviewed about 10 platforms and evaluated the most representative ones below. The number of * indicates the extent to which a platform satisfies a need or resolves a pain point, where ***** is the highest.

“Stepik is an educational engine and platform, focused on STEM open lessons. Stepik allows for easy creation of interactive lessons with a variety of automated grading assignments.” This is the opening introduction in their “Epic Guide to Stepik” official course, and their target customers are teachers instead of students [12]. Here’s the performance rating of Stepik:

Individuals

• Assignments/“steps” of lessons can be auto-graded for immediate feedback, but they don’t have step-by-step hints (*** understand/synthesize course materials)

• Non-interactive peer review: grade each other’s assignments; able to see different perspectives/approaches (* improve meta-cognitive skills)

• Certificates; knowledge points; competitions (*** get motivation to learn)

• Learn by accomplishing “steps”, more flexible and interactive than lectures; every concept has follow-up activities to help students enhance/examine their understandings (**** study/digest materials flexibly) (*** get good grades/certificates efficiently) (**** resolve: not realize having misconceptions)

• Can ask peers questions via comments / forum discussions; peers might answer out of altruism, but there is no further motivation (** resolve: dare not/feel difficult asking teachers)

Collaborators

• Communicate via comments / forum discussions (* practice collaboration skills)

Teachers

• Create “steps” for lessons with various activities or question types (**** help students understand materials)

• Access statistics of learners: only some general data that indicate completion rates or estimate how difficult a lesson is for students. Nothing about their mental states (* evaluate what students know/struggle with)

• For the assignments that can’t be auto-graded, can choose “peer review” instead of grading by themselves (**** resolve: limited manpower)

This is a free platform that visualizes code execution in five programming languages and provides a live help tool where volunteer users can help. Teachers are not necessarily agents in this system, but they may use this platform for faster and easier demonstration of how code is executed or ask students to use it for assignments [13]. Here’s the performance rating of Python Tutor:

Individuals

• Visualize code to understand its logic; move the process bar to see each step (*** understand/synthesize course materials) (** study/digest materials flexibly)

• Learn by helping others and diagnosing code errors through visualization; but students are only motivated by altruism or a passion for learning (*** improve meta-cognitive skills)

• Instant feedback & see how a step goes wrong (*** resolve: feel confused about course materials) (** resolve: not realize having misconceptions)

• Can ask peers questions via live help. Peers answer out of altruism, but because the user base is large, it’s easier to have volunteers (*** resolve: dare not/feel difficult asking teachers)

Collaborators

• The live help tool provides chat and displays different users’ mouse cursors, so they can communicate and work on a problem together. (* gain a sense of belonging/social support)

• Because they work on a single problem without commitment, collaboration is supposed to save time instead of taking up more time due to team coordination (**** accomplish work with less time spent)

• Due to the reason above, the extent of practicing collaborative skills is limited (** practice collaboration skills)

Teachers

• Use the platform to demonstrate how code works; prompt students to try out different examples (*** help students understand materials)

• If teachers encourage students to use this platform for assignments and to help others: i) teachers save time otherwise spent repeatedly explaining basic code logic (** resolve: limited manpower) ii) visualizations help students diagnose their code; when students help others, they also deepen their own understanding (** guide them to improve meta-cognitive skills)

It’s an online interactive platform that offers coding classes in many programming languages. Overall it’s similar to Stepik, but the courses seem to have no human instructors; it has a forum where learners can communicate, and it has additional features in its business plan [14]. Here’s the performance rating of Codecademy:

Individuals

• Follow-up assignments after each lecture/lesson (*** understand/synthesize course materials) (*** resolve: not realize having misconceptions)

• Learn by helping others on the forum and becoming more aware of common mistakes. However, learners don’t seem to have motivation; most answers on the forum are provided by moderators (** improve meta-cognitive skills)

• Learn at one’s own pace (**** study/digest materials flexibly)

• Learners can select a career/skill path to receive a learning plan (**** get good grades/certificates efficiently); they can also work on real-world projects that add to their resume. (**** get motivation to learn)

• Instant autograde & hints. Some users reported that the hints weren’t helpful, but they can ask for additional help from forum moderators (**** resolve: feel confused about course materials)

• Posting questions on the forum might be less scary/stressful than asking teachers, and the moderators answer quickly (**** resolve: dare not/feel difficult asking teachers)

Collaborators

• The forum allows users to share finished projects (instead of recruiting teammates) or help each other on coding problems. (* gain a sense of belonging/social support)

• Peer instruction or help from moderators makes problem-solving more efficient (**** accomplish work with less time spent)

• Since there’s no team commitment, the extent of practicing collaborative skills is limited (** practice collaboration skills)

Teachers

• The design of the course materials and hints has been iterated for years based on users’ feedback or big data; however, it seems that they don’t evaluate individual students for in-course iterations (**** help students understand materials) (*** evaluate what students know/struggle with) (** resolve: hard to relate/understand students)

• The non-real-time process of discussing problems with moderators (teacher) or peers might help students learn to diagnose/solve problems independently, but to a limited extent (* guide them to improve meta-cognitive skills)

• As long as a course is designed, everything is automated; only the moderators (teachers) provide help on the forum (***** resolve: limited manpower)

• The platform generates a learning plan with minimum workload according to a learner’s goal, which helps align the course objectives with students’ goals (*** resolve: goals not aligned with students’)

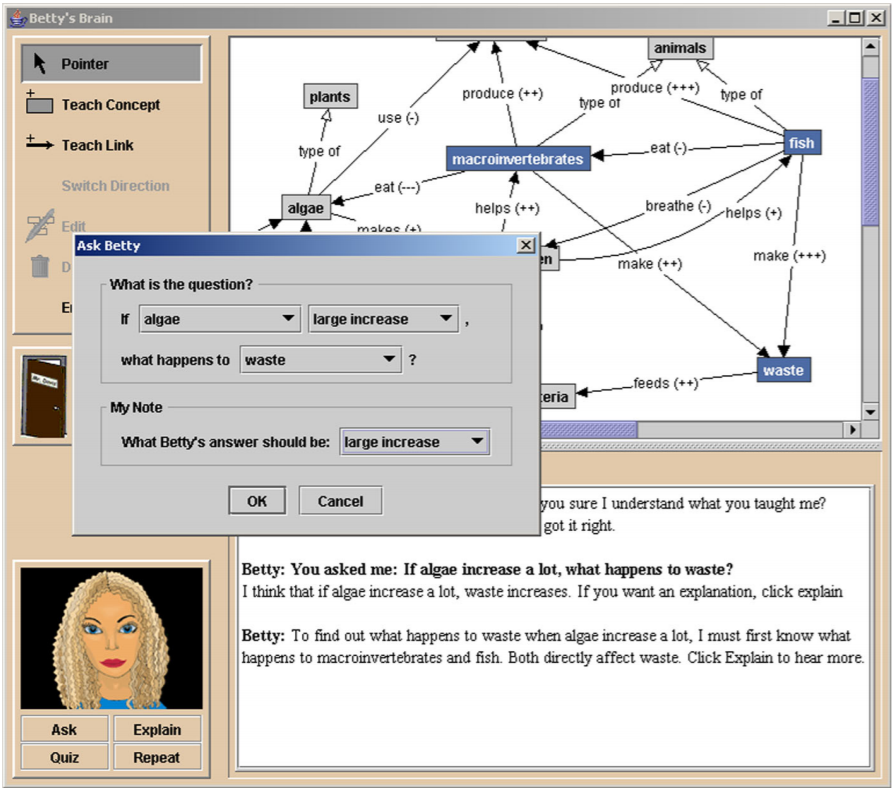

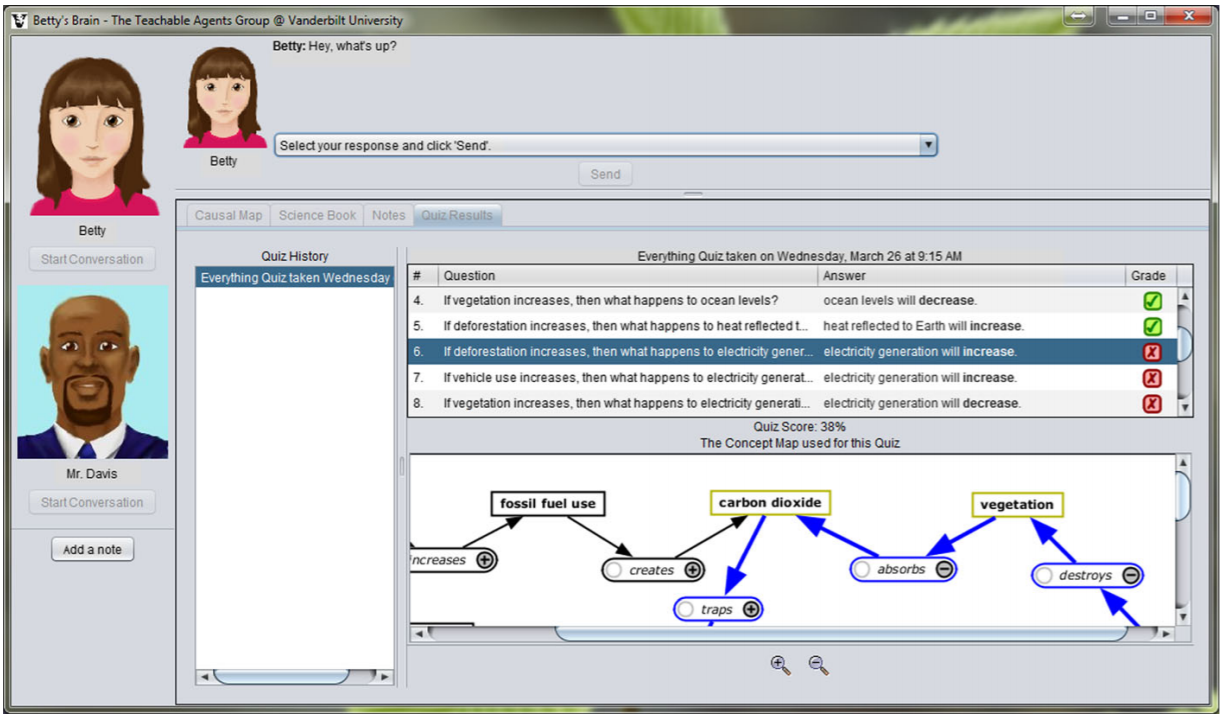

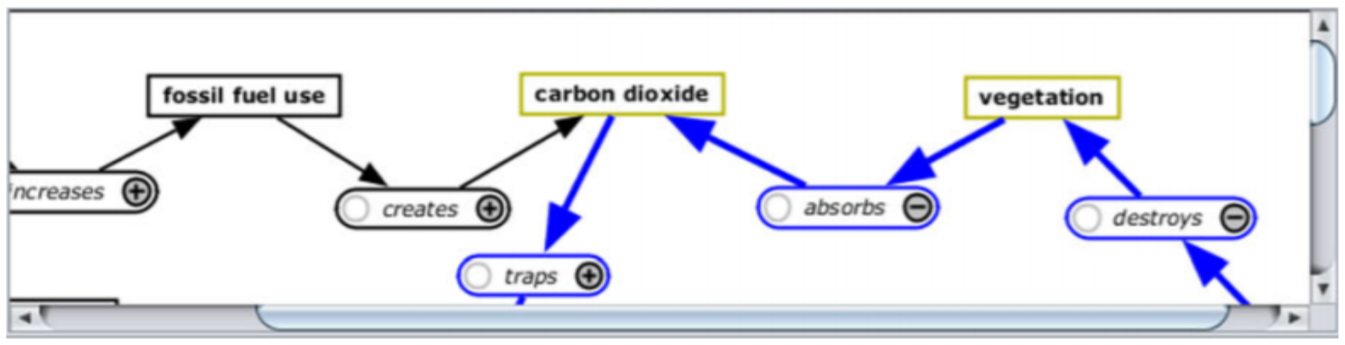

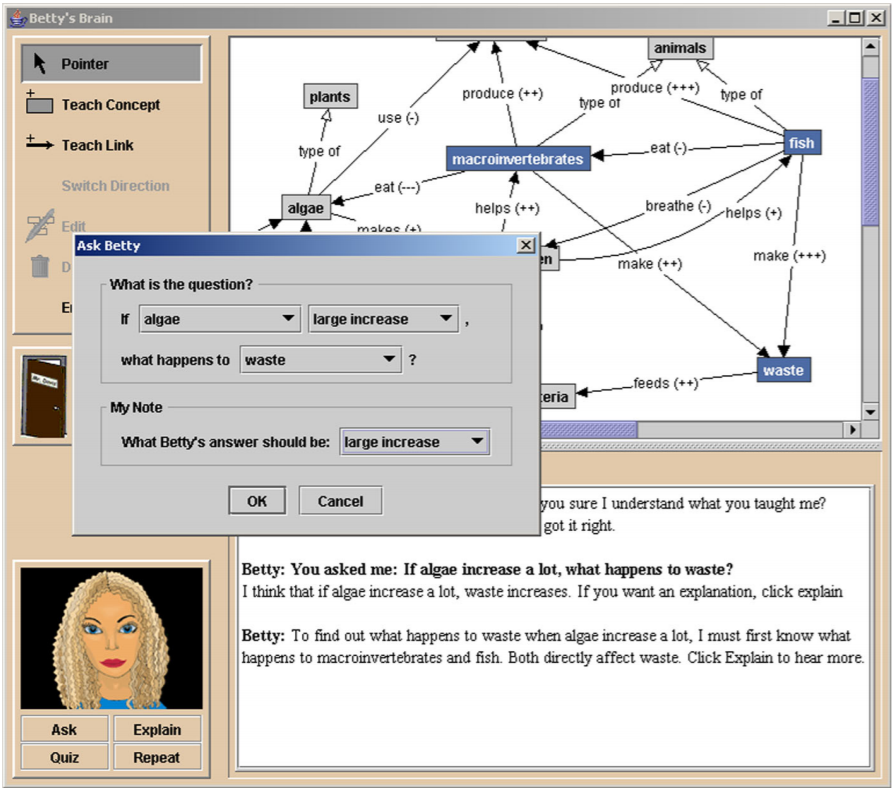

It’s a computer-based learning environment with over 10 years of research, prompting students to learn by teaching a virtual agent named Betty [15]. In the original version, students can use the interface below to i) add concepts to Betty and link them correctly ii) ask Betty questions to see what she knows, and iii) let Betty take quizzes provided by a mentor agent.

Reproduced from figure 1 from [15]

For adding and linking concepts, students use a “concept map.” After adding concepts, students can click the “Teach Link” button to drag the pointer from a start node to a destination node. A link appears along with a dialog box, and students then enter specific details about the relation into this box. Relations can be (i) hierarchical type-of, (ii) descriptive, or (iii) causal.

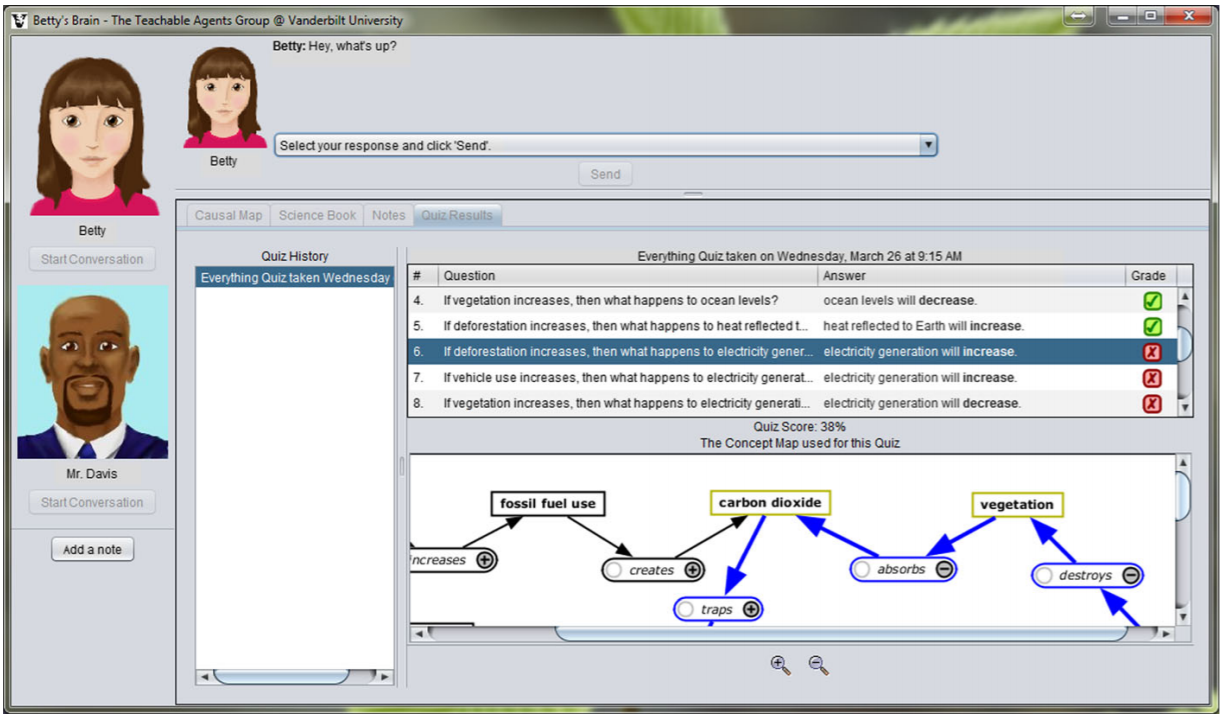

After the researchers integrated this system into middle school science classrooms, they found a dichotomy in students' performances due to their different meta-cognitive skills; 18 of 40 (45%) students made little progress, while 15% made great progress. The researchers tried to provide meta-cognitive strategy suggestions, but they found that 77% of the feedback was ignored by students. They redesigned the model with the new interface below.

Reproduced from figure 3 from [15]

Their focus was to understand why certain students made little progress, and they defined five clusters of students such as “careful editors,” “confused guessers,” “disengaged from tasks,” etc. However, future work is needed to understand how to help the students from other clusters become “careful editors.” Here’s the performance rating of this system:

Individuals

• Based on the resources/texts provided, edit concept maps by adding and linking concepts (***** understand/synthesize course materials) (**** improve meta-cognitive skills)

• Students are prompted to organize the concepts more carefully in order to teach another, perhaps due to the shared responsibility (**** get motivation to learn)

• Learn/edit concept maps at one’s own pace; however, the current interface doesn’t demonstrate students’ progress and they might lose track (*** study/digest materials flexibly)

• Let Betty take the quiz; however, it’s self-paced, so students might not discover misconceptions immediately (** resolve: not realize having misconceptions) (*** resolve: feel stressed/overwhelmed by workload)

Collaborators (No human collaborators in this system)

Teachers

• Can design the resources/text provided for students. However, the resources themselves might not be interactive/engaging, and no additional instruction is provided (** help students understand materials)

• Can utilize the concept map of each student to understand what he/she knows, but it’s hard to identify what the student struggles with (**** evaluate what students know/struggle with)

• Only need to provide guidance when students need help, since they learn independently most of the time; need to design quizzes for autograding (*** resolve: limited manpower)

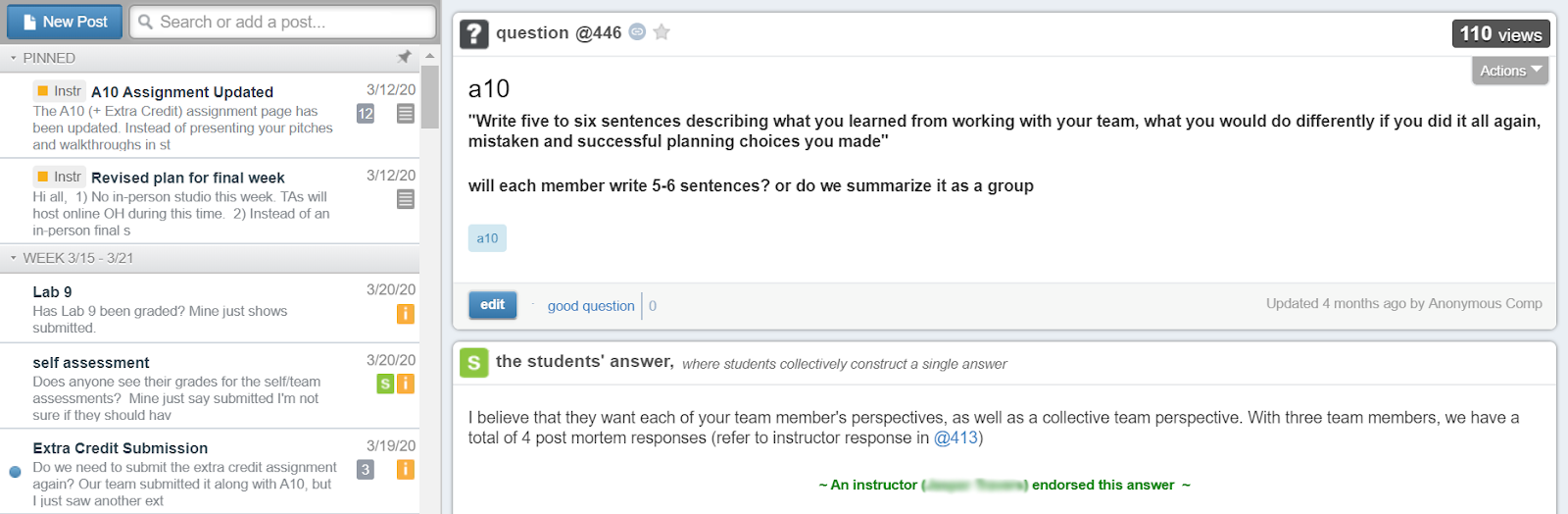

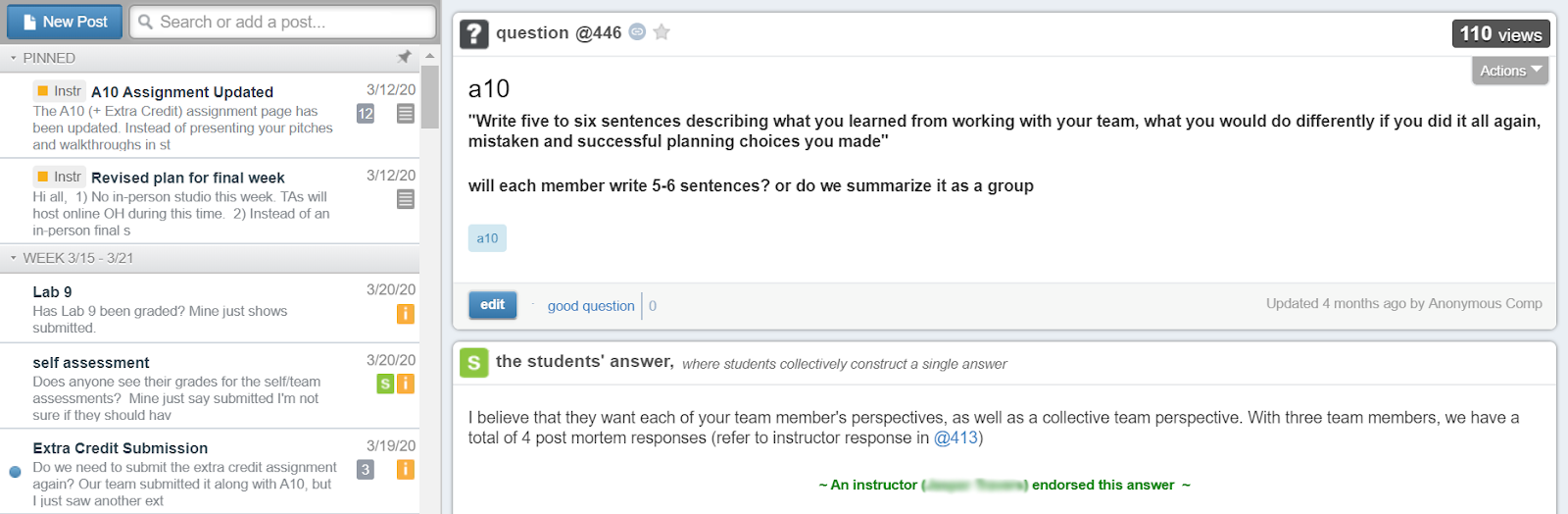

It's a Q&A platform where students can ask/answer questions anonymously, and TAs/professors can endorse students’ answers or provide answers themselves [16]. When students type similar questions, the platform displays relevant earlier posts; users can also vote for “good questions” or give “thanks,” which motivates others to ask and answer more. Here’s the performance rating of Piazza:

Individuals

• When students help each other, they solidify their knowledge; however, the motivation from altruism and “thanks” might be limited for this action (*** improve meta-cognitive skills)

• Instructors can endorse students’ answers or provide additional explanation. Students can ask/answer anonymously, less worried about asking a “dumb” question or being blamed for wrong answers (***** resolve: afraid of misleading peers if help them) (***** resolve: dare not/feel difficult asking teachers)

• Asking questions on the forum is more convenient than going to office hours or sending emails. Also, students can easily find answers if similar questions have been answered before (**** resolve: feel confused about course materials)

Collaborators

• No real collaboration for solving a problem, since it’s Q&A. However, students can practice communication skills, which is one of the collaboration skills. (** practice collaboration skills)

Teachers

• Since more students are asking questions more often, teachers have more opportunities to help them better understand materials (** help students understand materials)

• The platform has a lot of data about students’ confusion, but it doesn’t utilize the data to generate any report; teachers might be able to explore students’ confusions by browsing, but this isn’t the optimized way. (*** evaluate what students know/struggle with) (*** resolve: hard to relate/understand students)

• No need to answer repetitive questions since the platform links similar questions together. Also, can endorse students’ answers. (*** resolve: limited manpower)

Their website states that research showed their blended approach nearly doubled growth in performance on standardized tests relative to typical students in the second year of implementation [17]. Their blended approach includes “engage”, “develop”, and “demonstrate”. Because I had limited access to their sample center, I could only learn about their methods from their promotional videos and advertisements. Here’s the performance rating of Carnegie Learning:

Individuals

• Based on the systematic assessments, AI provides 1-to-1 math coaching which boosts the efficiency of learning (**** understand/synthesize course materials) (**** get good grades/certificates efficiently)

• Students receive step-by-step hints in assignments (*** improve meta-cognitive skills)

• The learning process is engaging because of individual and peer activities (*** get motivation to learn)

• Students can easily receive help from AI tutor or instructors (*** resolve: feel confused about course materials)

• Students receive systematic assessments for each topic (*** resolve: not realize having misconceptions)

Collaborators

• Students are engaged in activities based on math problems, and the teachers will guide them to collaborate; however, there doesn’t seem to be long-term teamwork or in-depth collaboration. (*** practice collaboration skills) (*** learn about collaboration skills) (**** resolve: can’t propose/suggest effectively)

Teachers

• With the bank of assessments and the detailed reports of students, teachers are able to better understand what students know and help them correspondingly. (**** help students understand materials) (**** evaluate what students know/struggle with) (**** resolve: hard to relate/understand students)

• Teachers focus on helping students understand the mathematical thinking process and improve problem solving skills; I’m not sure how exactly they achieve it, but they highlight this point in their advertisement (*** guide them to improve meta-cognitive skills)

• Teachers teach students to collaborate in engaging activities (*** help them learn to collaborate) (**** resolve: hard to encourage group study/discussion)

• Although many materials and tools are provided for the teachers, it still requires a lot of manpower. (* resolve: limited manpower)

Summary

According to my analysis above, I summarized each platform’s performance with respect to each need or pain point in the figures below. The platforms with the best design solutions are marked with darker colors.
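The summary step can be sketched in a few lines of Python. This is only an illustration: it uses two of the needs/pain points, with star counts copied from the ratings above, and the helper name `best_platforms` is my own assumption rather than part of any platform.

```python
# Star ratings copied from the platform bullets above (1 = *, 5 = *****).
# Only two needs/pain points are included to keep the sketch short.
ratings = {
    "improve meta-cognitive skills": {
        "Stepik": 1, "Python Tutor": 3, "Codecademy": 2,
        "Betty's Brain": 4, "Piazza": 3, "Carnegie Learning": 3,
    },
    "resolve: limited manpower": {
        "Stepik": 4, "Python Tutor": 2, "Codecademy": 5,
        "Betty's Brain": 3, "Piazza": 3, "Carnegie Learning": 1,
    },
}


def best_platforms(scores):
    """Return the platform(s) with the highest star count (hypothetical helper)."""
    top = max(scores.values())
    return sorted(name for name, stars in scores.items() if stars == top)


# Map each need/pain point to the platform(s) that would be shaded darkest.
summary = {need: best_platforms(scores) for need, scores in ratings.items()}

for need, winners in summary.items():
    print(f"{need}: {', '.join(winners)}")
```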

In designing for individuals, we see that there are already reasonably strong solutions across the six platforms for each need and pain point; however, no one platform or system addresses all of these needs/pains sufficiently. In my design, I will build upon the existing strengths of platforms to address all the needs/pains of individuals.

When it comes to satisfying the needs/pains of teachers, existing platforms address most of them but struggle when it comes to facilitating students’ meta-cognitive skill improvement (need - 3) and collaboration (need - 4). Further, existing platforms often fail to sufficiently align the instructors’ goals with students’ goals (pain - 4). These limitations of existing platforms highlight the areas for improvement to be addressed with my design.

A key finding is that the needs or pain points of collaborators were barely addressed, which demonstrates that a new platform is needed to help students effectively collaborate. I notice there is a tension between in-depth collaboration and the goal of “get good grades/certificates efficiently,” because collaboration requires time and effort to learn about not only the course materials, but also the thoughts and thinking processes of other collaborators.

Based on these existing solutions, I began to brainstorm how I might integrate collaborative learning while preserving or even reducing students’ workload, and how this integration might effectively improve students’ meta-cognitive skills. Eventually, I came up with the prototype below.

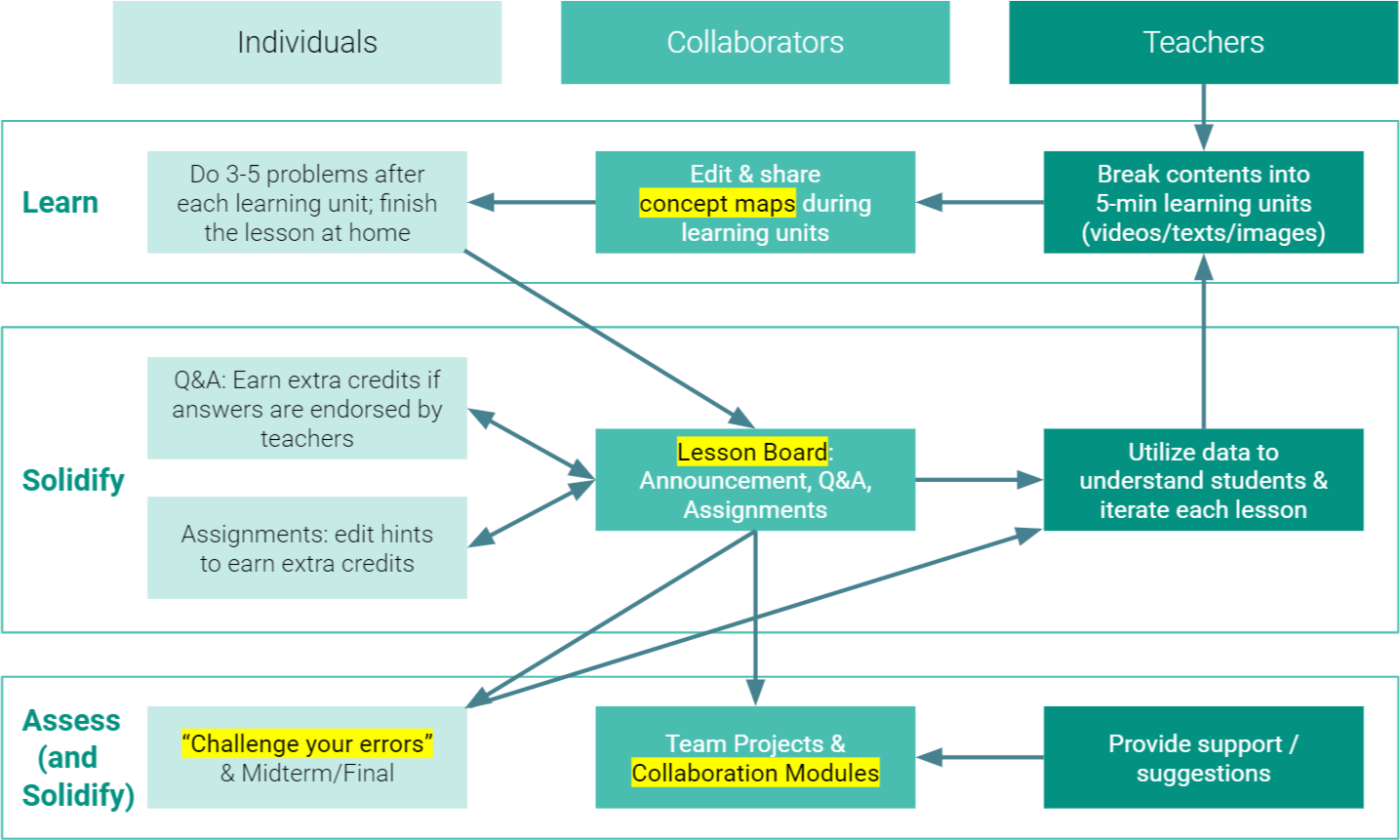

System Framework

My proposed prototype is a computer-based learning system that can meet the needs and goals of both a remote learning environment, where flexibility is required, and an in-person course. It focuses on courses that deal with logical reasoning and creative problem solving (e.g., most STEM courses) in order to improve students’ meta-cognitive skills. Below is a prospective framework of this system, PeAce (Peer-oriented AI-based Collaborative Educational System).

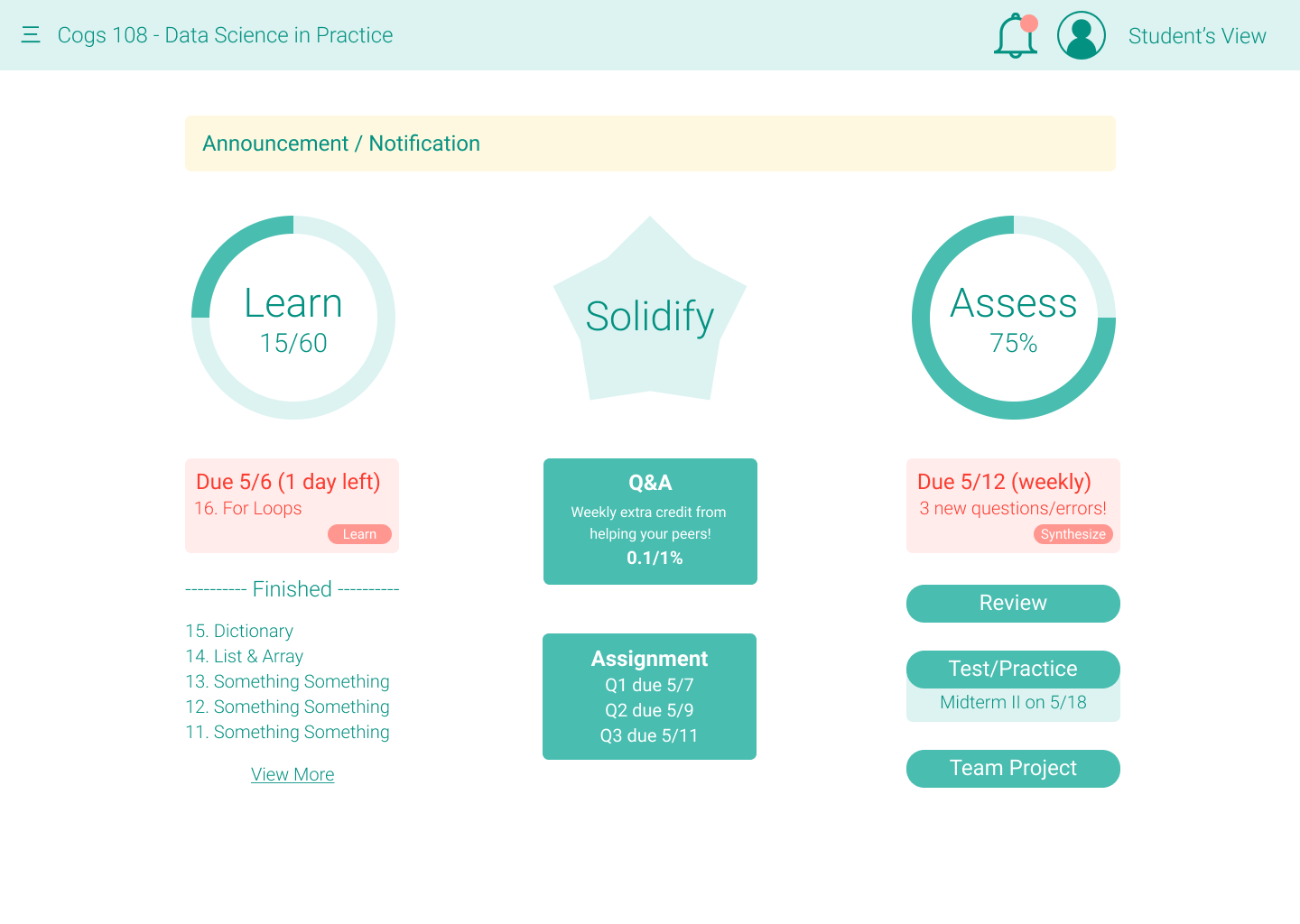

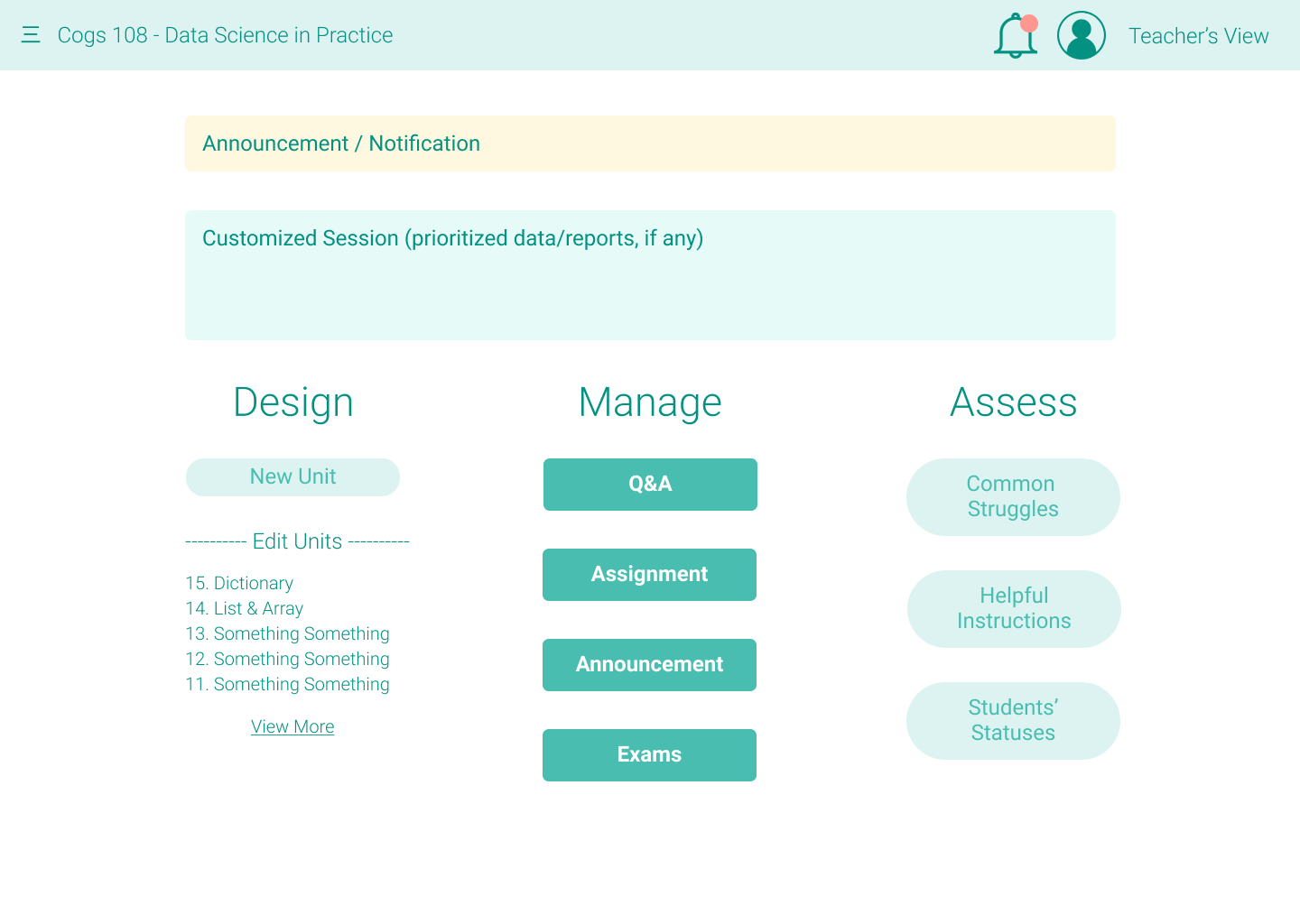

Compared with the majority of existing systems, this framework has four highlights: concept maps, lesson board, “challenge your errors”, and collaboration modules. To help you visualize this framework from users’ views, below are the home screens for students/teachers.

In the sections below, I'll illustrate the four highlights (concept maps, lesson board, “challenge your errors,” and collaboration modules) in detail and identify the potential data/reports that PeAce may provide for teachers.

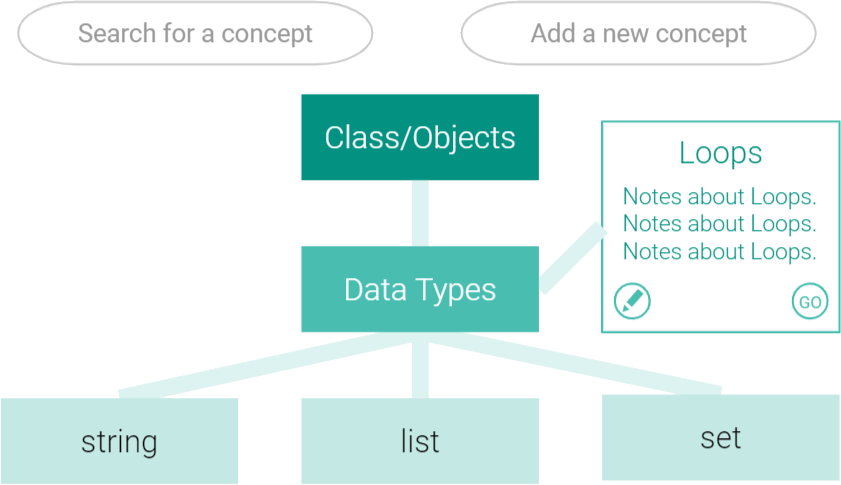

I. Concept Maps

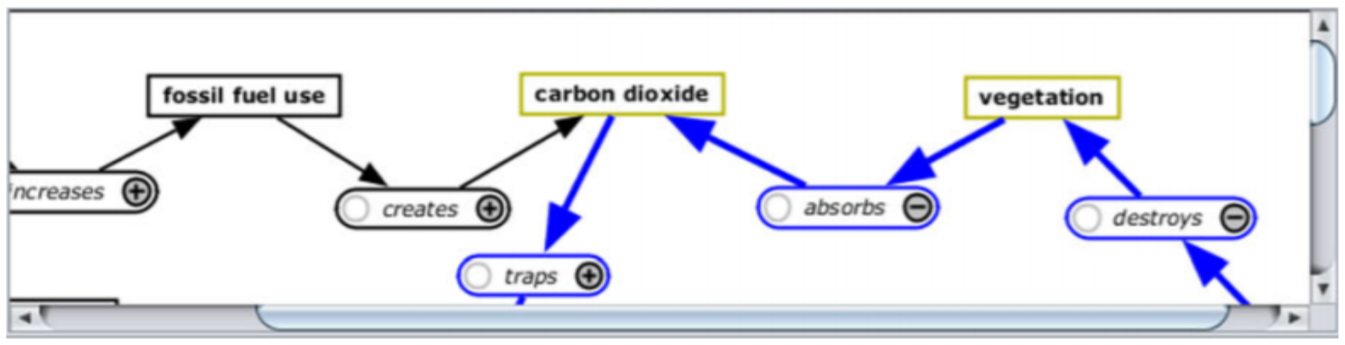

The idea of using “concept maps” as notebooks was inspired by the Betty’s Brain system, where different concepts can be connected via hierarchical, descriptive or logical relationships, just like a network of flashcards. I chose to build upon this design because it facilitates student practice in the two core meta-cognitive skills (“Identify” and “Diagnose”) summarized in the “Literature Review” section.

Reproduced from figure 3 from [15]

I modified this design to improve user experience:

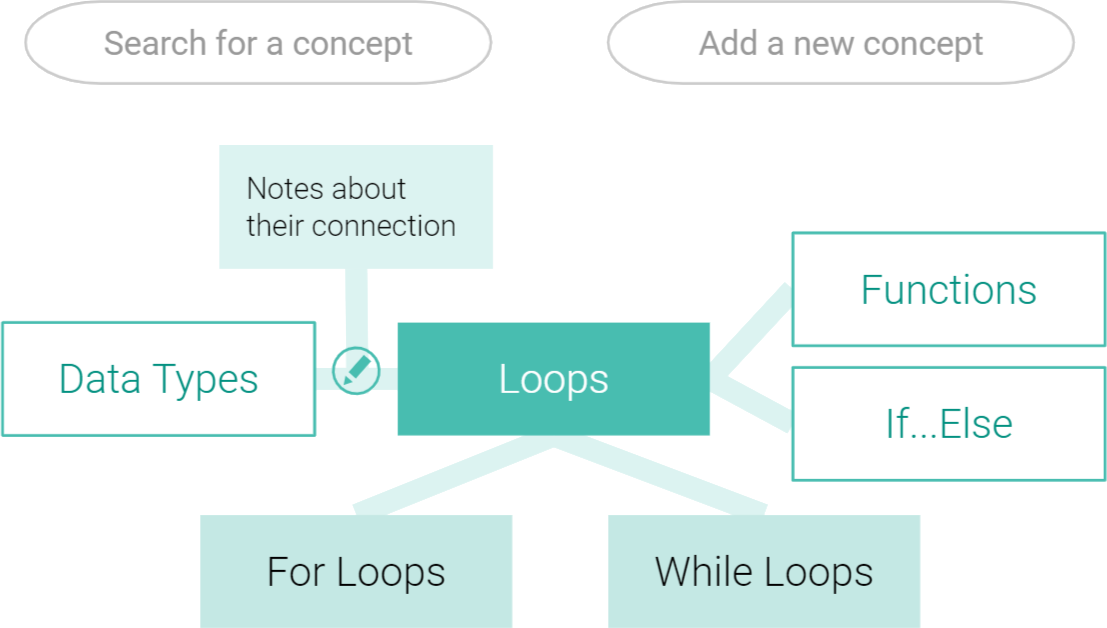

The notes and buttons display only when users hover on a concept (left figure) or link (right figure); when users click the “go” button on a concept (e.g., “Loops” - left figure), the concept map moves (“Loops” is centered - right figure). The vertical positions indicate hierarchical relationships that student editors define, and students edit these maps during learning units (while watching lecture videos, etc.).

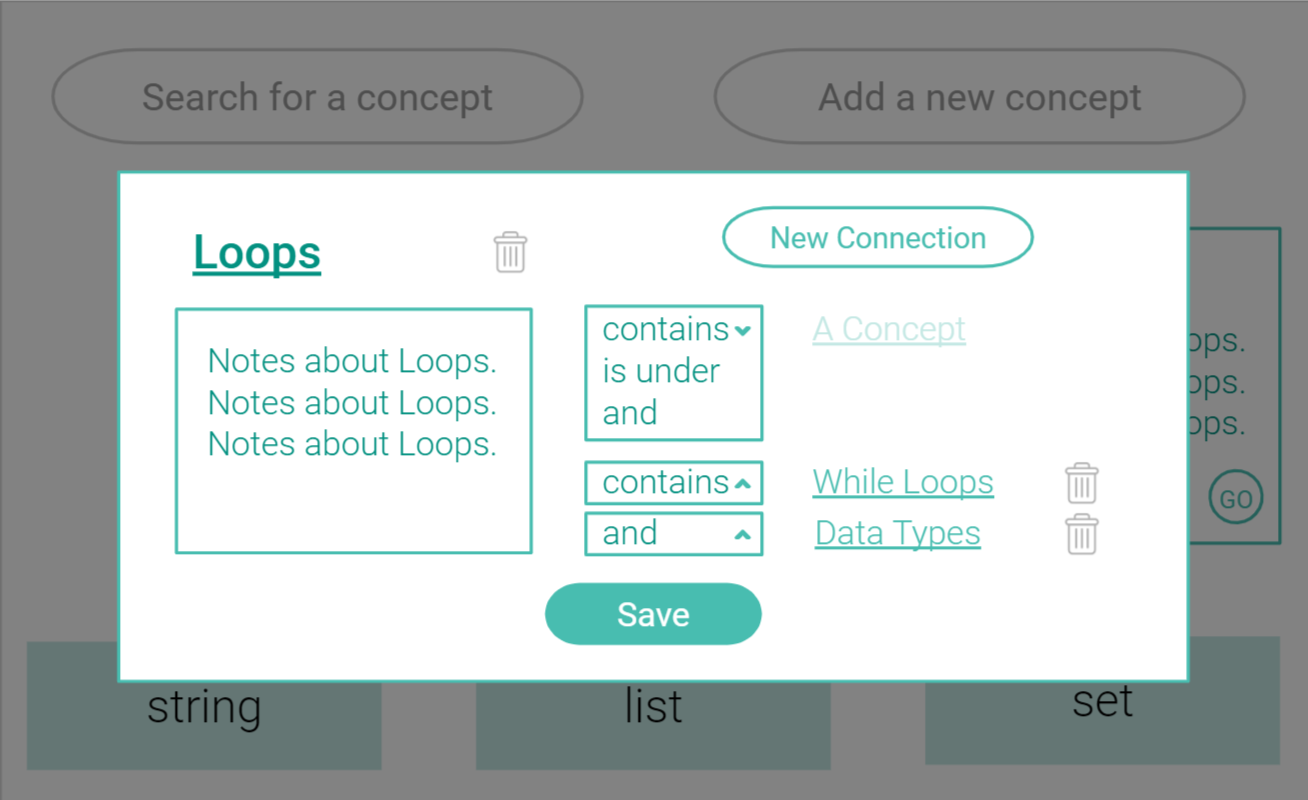

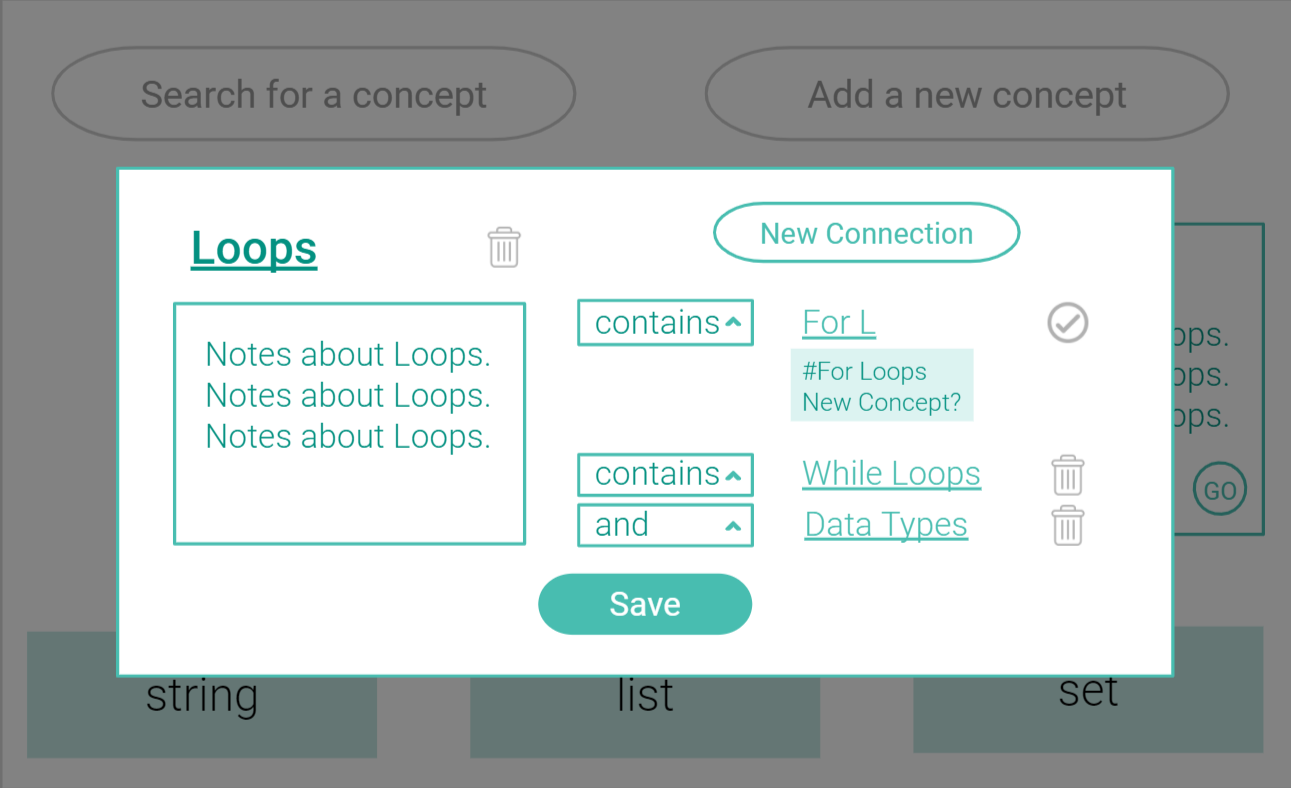

Editors can click either concept to edit its connection with another one, as the figures below illustrate. The edit will be automatically synced for both concepts.

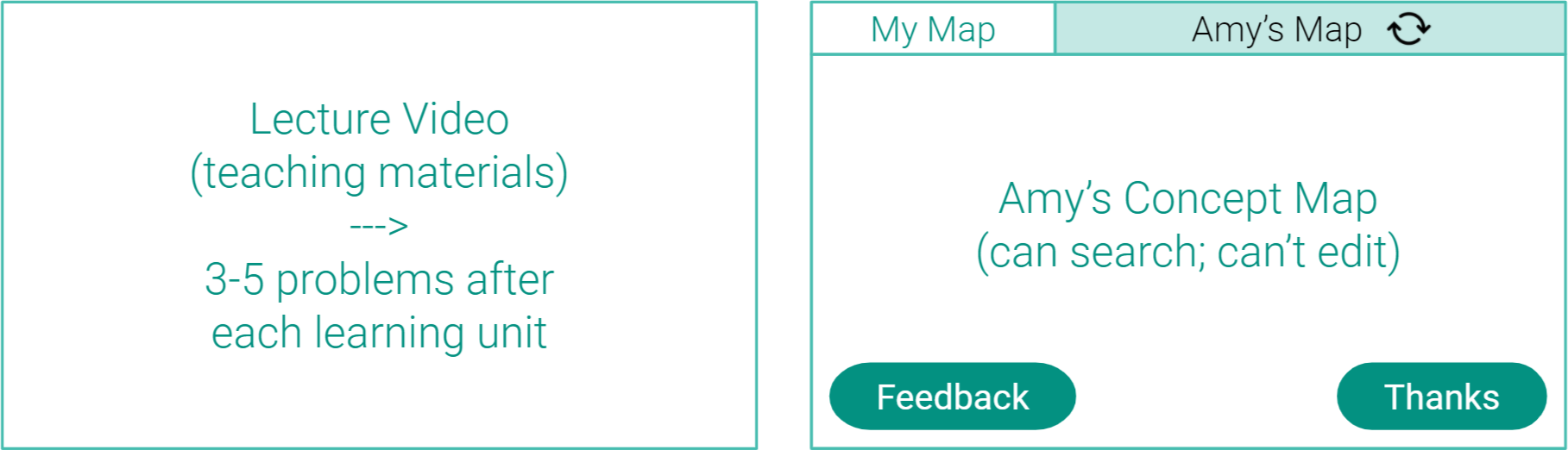
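One way to get this “edit from either side, synced for both” behavior is to store each link once, keyed by the unordered pair of concepts it connects. The sketch below is only an illustration under that assumption; the class name `ConceptMap`, its methods, and the example concept “For loop” are hypothetical and not part of the PeAce prototype.

```python
from dataclasses import dataclass, field


@dataclass
class ConceptMap:
    """Hypothetical per-student concept map: notes on concepts, relations on links."""
    owner: str
    notes: dict = field(default_factory=dict)   # concept name -> note text
    links: dict = field(default_factory=dict)   # frozenset({a, b}) -> relation text

    def add_concept(self, name, note=""):
        self.notes[name] = note

    def set_link(self, a, b, relation):
        """Create or edit the link between a and b, from either endpoint."""
        if a == b:
            raise ValueError("a link needs two different concepts")
        if a not in self.notes or b not in self.notes:
            raise KeyError("both concepts must exist before linking")
        # The unordered key means (a, b) and (b, a) address the same record,
        # so an edit made from one concept is visible from the other.
        self.links[frozenset((a, b))] = relation

    def links_of(self, concept):
        """Return {other concept: relation} for every link touching `concept`."""
        return {
            next(iter(pair - {concept})): relation
            for pair, relation in self.links.items()
            if concept in pair
        }


cmap = ConceptMap(owner="student_a")
cmap.add_concept("Loops", "repeat a block of code")
cmap.add_concept("For loop")
cmap.set_link("Loops", "For loop", "type-of")
# Editing from the other endpoint updates the same link.
cmap.set_link("For loop", "Loops", "a for loop is one kind of loop")
```

Storing one record per pair avoids keeping two copies of a relation in step, at the cost of not recording which end is the parent; a hierarchical direction would need an extra field.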

To enable collaboration while minimizing students’ tendency to contribute less, my design strategy is that every student still has their own concept map and can’t copy text from others’ maps. They can only switch to view others’ maps, give thanks/feedback, and modify their own maps.

Because students have different levels of meta-cognitive skills, they might extract different amounts of knowledge from the same teaching materials. This design narrows the gaps in knowledge extraction by helping students learn from both lecture videos and what other students have learned. The “feedback/thanks” makes this editing process more interactive, giving students constructive advice and emotional rewards from peers.

Besides peer interactions, another motivation for students to thoughtfully edit concept maps is that their maps will be their cheat sheets for assignments or tests. Nowadays, the tremendous accessibility of knowledge decreases the importance of memorizing basic facts or formulas; with concept maps as cheat sheets, students can focus more on synthesizing different concepts and solving complex problems in creative ways.

II. Lesson Board

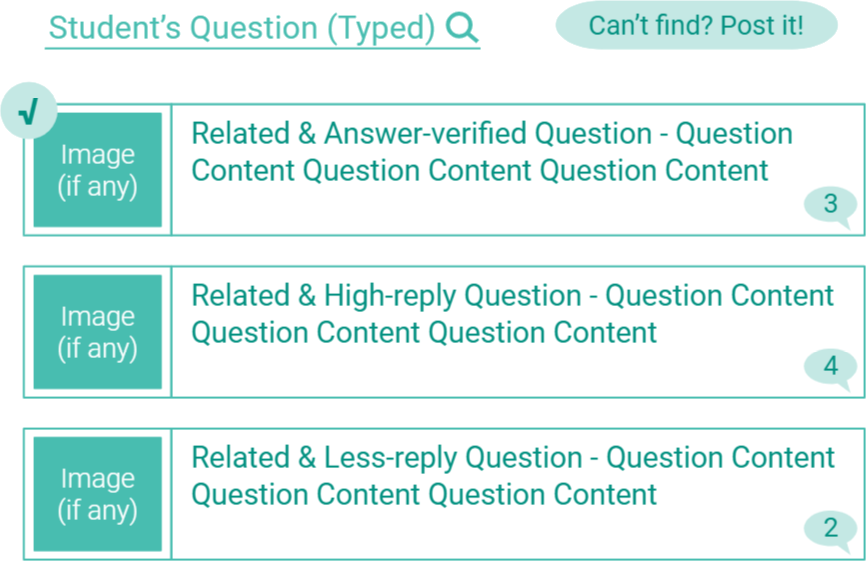

The Lesson Board of PeAce has three components: Announcement, Q&A, and Assignments. Announcement is where teachers post logistical information and students can ask questions if any; it’s well-developed in existing systems, so I’ll skip discussing it. My design of the Q&A component is based on Piazza, where students can ask/answer questions anonymously and teachers can endorse students’ answers.

Reproduced from Piazza

I modified its design from these perspectives: 1) Motivation. For students, the number of their answers endorsed by instructors will be counted and linked to extra credit. Piazza only displays “endorsed,” which provides limited motivation. 2) User experience and layout. The “New Post” button is hidden in order to minimize similar questions; however, if students click “Can’t find? Post it”, what they typed in the search bar will be automatically copied into a new post for further editing.

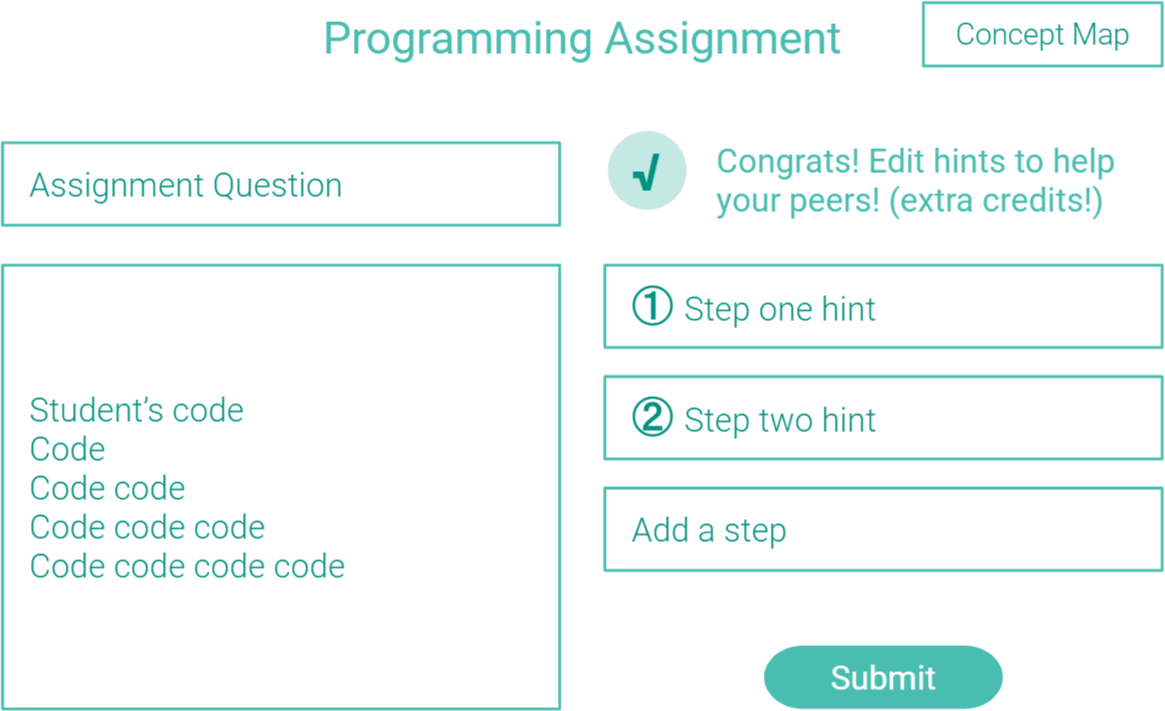

For the Assignment component, one highlight of PeAce is that students can edit step-by-step hints for others after they successfully complete a question (figure on the left). While working on a question, a student can get a hint every 90 s and choose to “like” a hint or “switch” to another one if it is hard to understand (figure on the right).

There is a trade-off between helping students move forward with hints and preventing them from relying on hints. The “switch” function (figure on the right) is designed to help hint receivers move forward in case they see “unhelpful” hints; however, only the same number of steps (e.g., two steps) will display after they switch hints, and there’s a word limit for each step to prevent hint editors from hinting too much. Also, every time hint editors submit a hint, an academic integrity notice will pop up to discourage them from directly revealing answers.
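The pacing rules above can be sketched as follows. This is a minimal illustration under two assumptions of mine: “a hint every 90s” is read as one additional hint step unlocking every 90 seconds, and the per-step word limit is set to 25 words (the design does not fix a number). All function and constant names are hypothetical.

```python
HINT_INTERVAL_S = 90        # from the design: one hint every 90 seconds
MAX_WORDS_PER_STEP = 25     # assumed value; the design only says "a word limit"


def is_valid_hint(steps):
    """A hint is accepted only if every step stays within the word limit."""
    return all(len(step.split()) <= MAX_WORDS_PER_STEP for step in steps)


def steps_unlocked(seconds_elapsed):
    """Number of hint steps a student may see after working for this long."""
    return seconds_elapsed // HINT_INTERVAL_S


def visible_steps(hint_steps, seconds_elapsed):
    """Steps of one hint that are currently visible to the student."""
    return hint_steps[:steps_unlocked(seconds_elapsed)]


# Two hints written by different students for the same question.
hint_a = ["Re-read the loop condition.", "Check the last index.", "Trace i = 0."]
hint_b = ["What should the loop stop on?", "Print the index.", "Try a short list."]

elapsed = 200  # seconds spent on the question so far
before_switch = visible_steps(hint_a, elapsed)
# "Switch" shows another author's hint but unlocks no extra steps.
after_switch = visible_steps(hint_b, elapsed)
```

Because the number of visible steps depends only on elapsed time, switching changes whose wording a student sees without revealing more of the solution.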

III. Challenge Your Errors

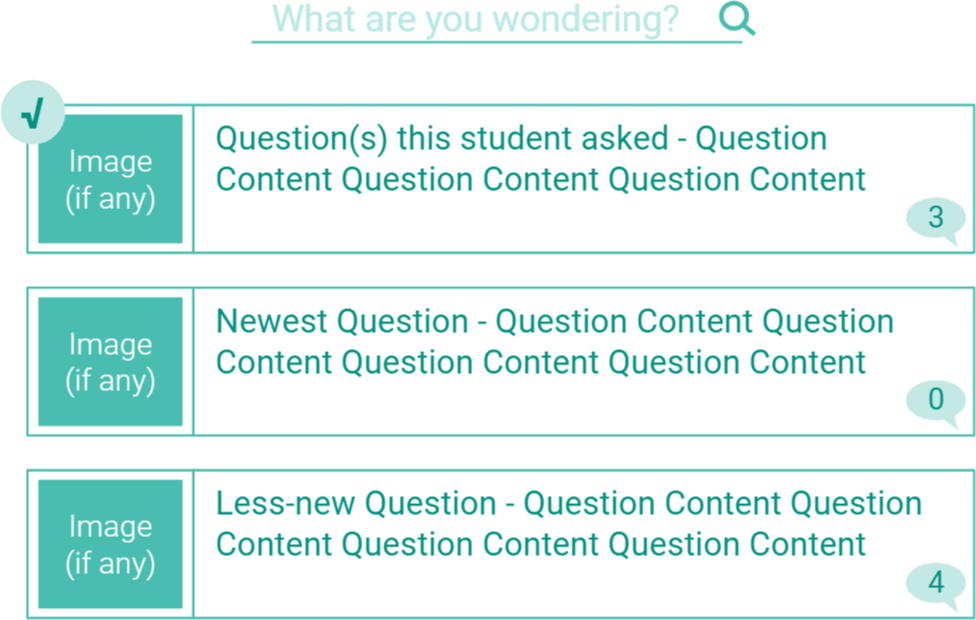

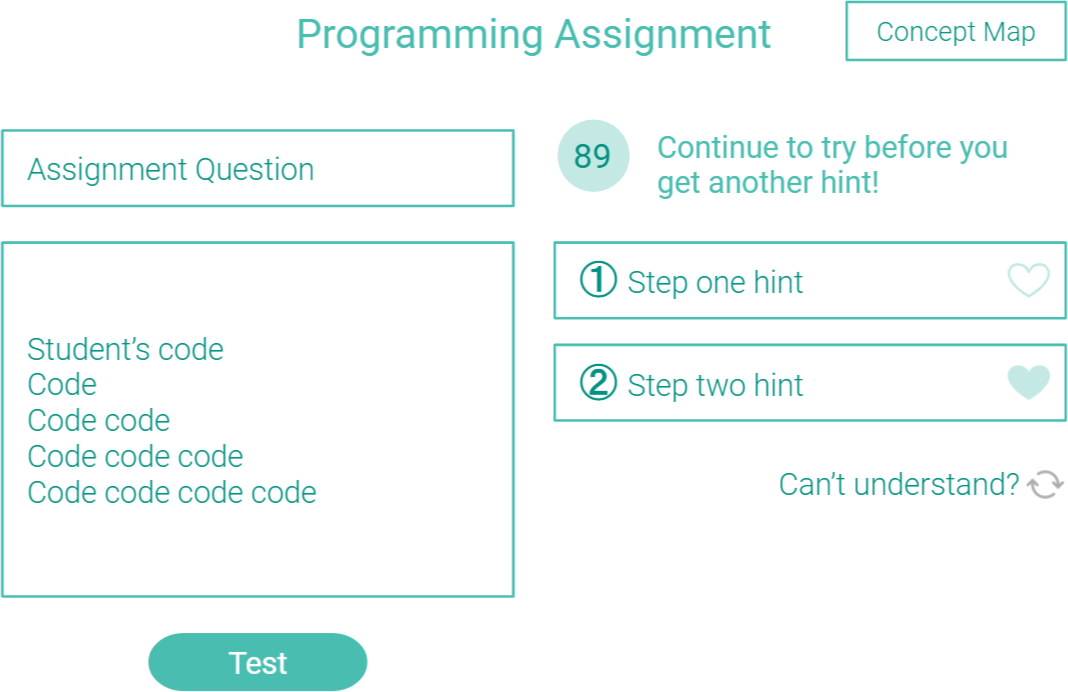

“Challenge Your Errors” is a module that helps students discover and resolve misunderstandings in a more individualized way. It has two components: Analyze and Test.

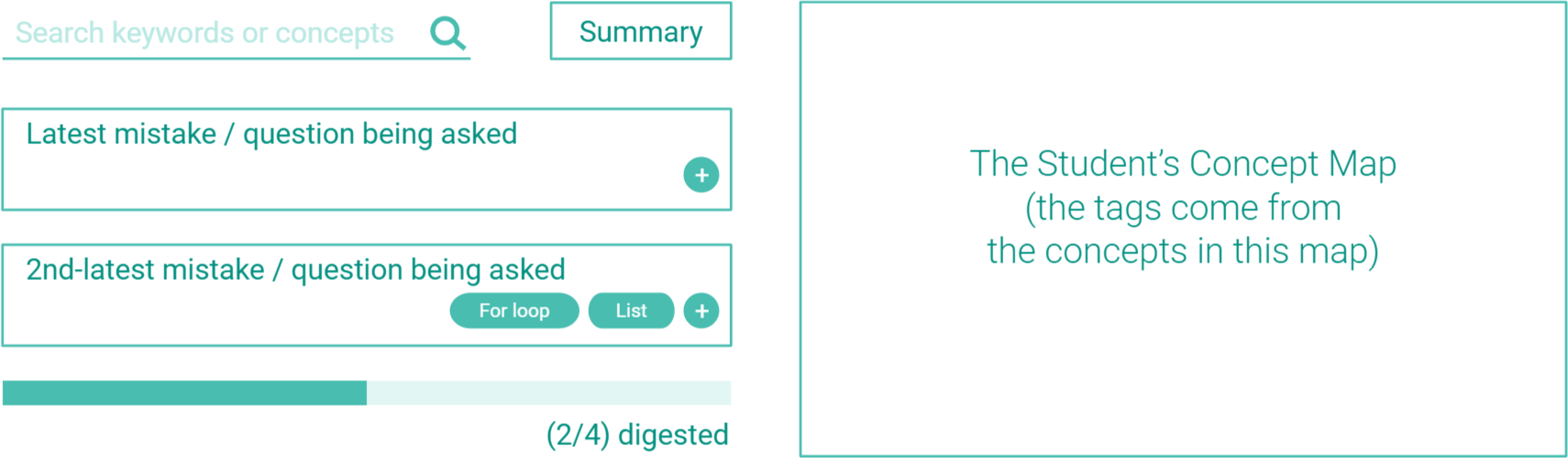

The Analyze component collects a student’s questions in the Q&A board and this student’s mistakes from learning units, assignments, and tests. Students need to tag related concepts onto each mistake/question, and they can add corresponding notes onto their concept maps; this will be a mandatory weekly assignment to help students digest materials in time. After students click “Summary,” a list of tagged concepts will appear, ordered by how frequently each was tagged (from high to low); by searching for a certain concept, students can also review all the mistakes/questions related to that concept, diagnosing their confusion.
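The “Summary” and search behaviors amount to counting tags and filtering records. Below is a minimal sketch, assuming each mistake/question is stored as a dictionary with a `concepts` list; the record layout, the sample data, and the function names are hypothetical.

```python
from collections import Counter

# Hypothetical records: one per mistake or question, tagged by the student.
records = [
    {"source": "assignment", "text": "Off-by-one in while loop",
     "concepts": ["Loops", "Conditionals"]},
    {"source": "Q&A", "text": "When does a for loop stop?",
     "concepts": ["Loops"]},
    {"source": "test", "text": "Missing base case",
     "concepts": ["Recursion", "Loops"]},
]


def summarize(records):
    """List (concept, count) pairs, most frequently tagged first."""
    counts = Counter(c for r in records for c in set(r["concepts"]))
    # Ties are broken alphabetically so the order is deterministic.
    return sorted(counts.items(), key=lambda item: (-item[1], item[0]))


def review(records, concept):
    """Return every mistake/question tagged with the given concept."""
    return [r for r in records if concept in r["concepts"]]


for concept, count in summarize(records):
    print(concept, count)
```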

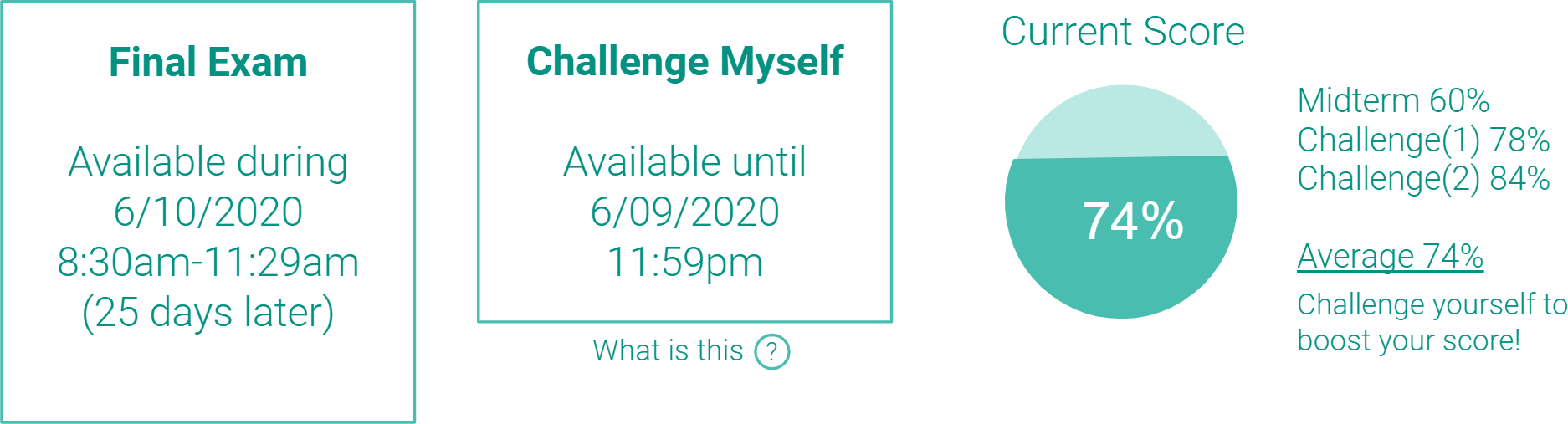

The Test component provides two types of tests: 1) Midterm/Final: questions are selected from a pool of summative questions designed by teachers. This type only gives one attempt. 2) Challenge Myself: questions are variations of a student’s past mistakes, which can be generated and autograded by AI. This type can be taken as many times as the student wants before the day of the final exam.

The final Test Score is recommended to be calculated as below (but flexibility is allowed):

$$
\text{Test Score} = \max\left\{ \frac{\text{MidtermScore} + \text{FinalScore} + CM_1 + CM_2 + \dots + CM_n}{2 + n},\ \text{FinalScore} \right\}
$$

where $n$ is the total number of “Challenge Myself” tests that a student takes, and $CM_i$ refers to the student’s score in the $i$-th “Challenge Myself” test.
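As a quick check of how this formula behaves, here is a small sketch in Python. The function name `final_test_score` and the sample scores are made up for illustration; only the formula itself comes from the design.

```python
def final_test_score(midterm, final, challenge_scores=()):
    """Recommended Test Score: the better of the overall average and the final.

    The average covers the midterm, the final, and every "Challenge Myself"
    attempt, so no attempt is dropped.
    """
    n = len(challenge_scores)
    average = (midterm + final + sum(challenge_scores)) / (2 + n)
    return max(average, final)


# A strong final outweighs a weak midterm: max(75, 90) = 90.
print(final_test_score(60, 90))
# "Challenge Myself" attempts can lift the average: max(340 / 4, 70) = 85.
print(final_test_score(80, 70, [90, 100]))
```

Since every attempt enters the average, a careless “Challenge Myself” attempt can lower the score, although the result never falls below the final exam score.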

This design aims at satisfying students’ goal of getting good grades efficiently while ensuring that they reach the learning objectives established by teachers. Students who master the materials and get good Midterm scores can take fewer “Challenge Myself” tests, while those who need to work harder can study more and take more tests.

No score will be dropped unless technical issues occur, so students need to get themselves ready before taking each “Challenge Myself” test. Also, they need to read about the severe consequences of cheating and sign an academic integrity statement before every test.

These design considerations prevent students from misusing this opportunity of taking more tests.

IV. Collaboration Modules

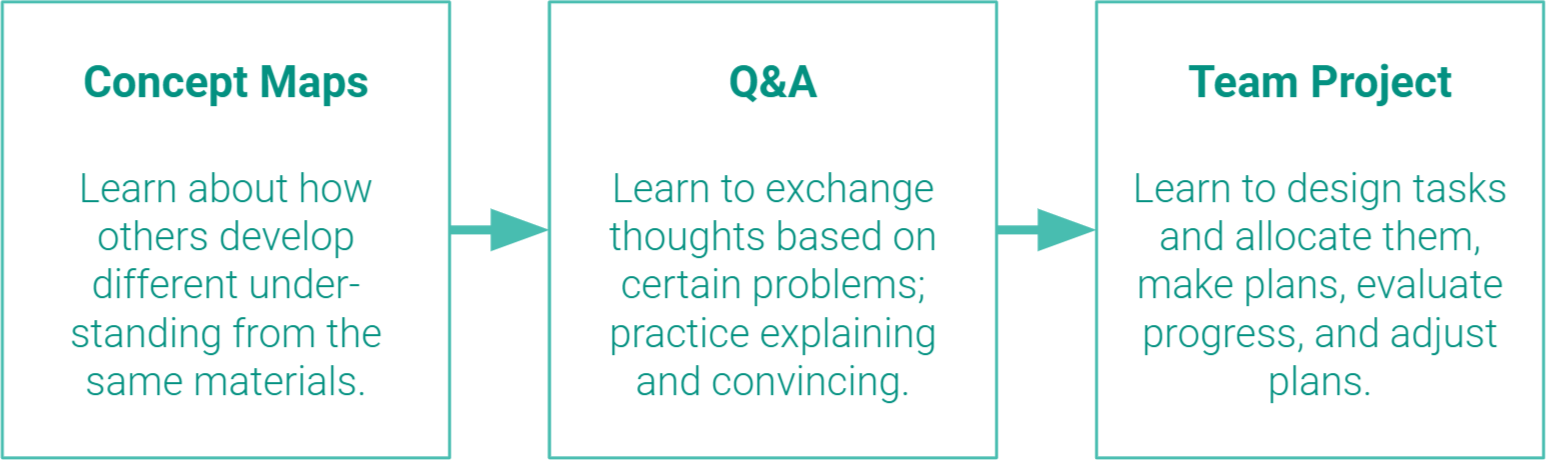

Collaboration modules are integrated into three parts of PeAce, including the shared concept maps, the Q&A (from Lesson Board), and the team project (to be introduced). Each part teaches or trains students on different collaborative skills, as indicated below.

Concept maps facilitate collaboration by exposing students to others’ mental models (represented by their concept maps), which serves as a “trailer” for students before they encounter a wider range of ideas in Q&A and Team Project.

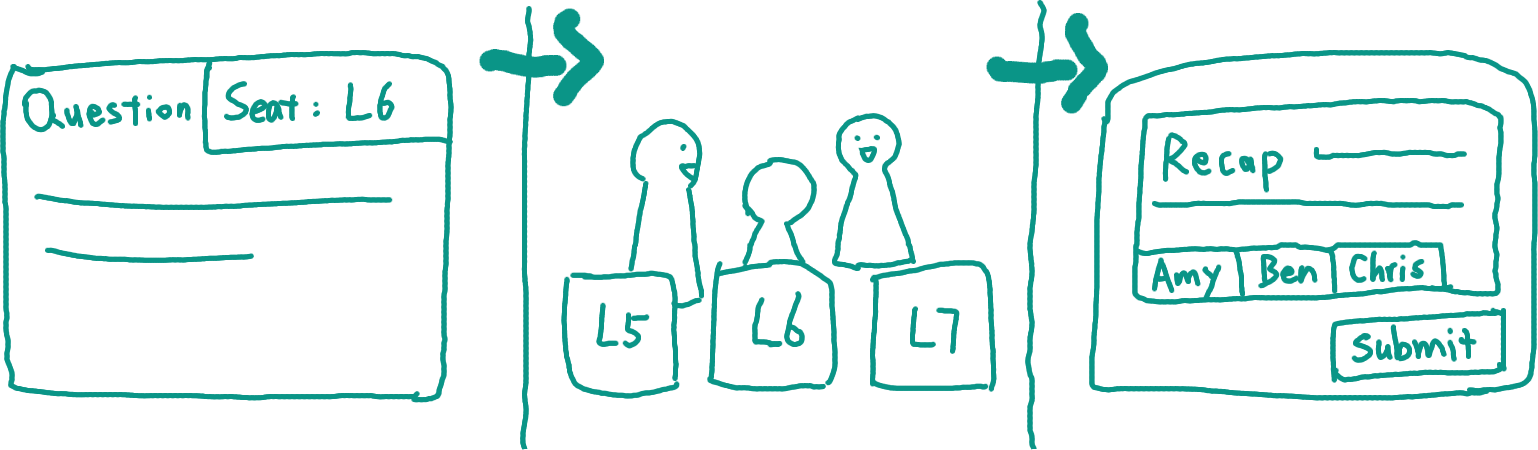

The Q&A can be used online or in person in different formats; the in-person format benefits students more because speaking is more efficient than typing, and the back-and-forth communication helps students detect and fix more errors. The in-person Q&A is designed to take place in a classroom with seat numbers; when students post questions, they need to indicate a seat number so others can come to discuss; teachers can help when needed. After figuring out the answer, everyone involved in the discussion should collaboratively write a recap of their process and conclusions. If this recap is endorsed by teachers, then everyone gets extra credit.

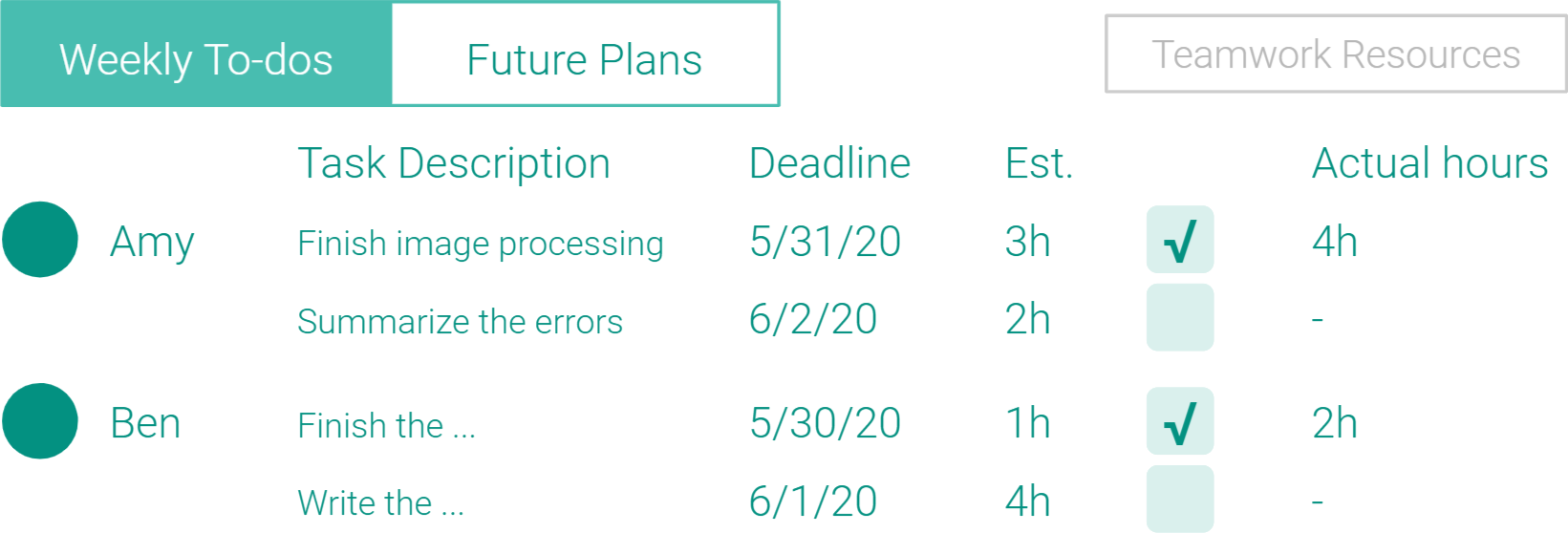

Team projects require advanced collaboration skills (designing/allocating tasks, making/adjusting plans, etc.) that students may not have learned yet; if they don’t know these skills at all, they cannot practice them. Necessary workshops or tutorials may be provided based on the Collaborative Problem Solving (CPS) model in [10]. Also, a progress monitoring tool may be embedded into or linked to the platform (e.g., from Google Sheets), helping teammates clarify responsibilities and keep track of progress.

During the in-person Q&A sessions, teams can also discuss how to make plans or allocate tasks and receive instant help from instructors. This saves students the time of scheduling meetings outside class and making additional commutes, and it helps avoid bottlenecks in team discussions.

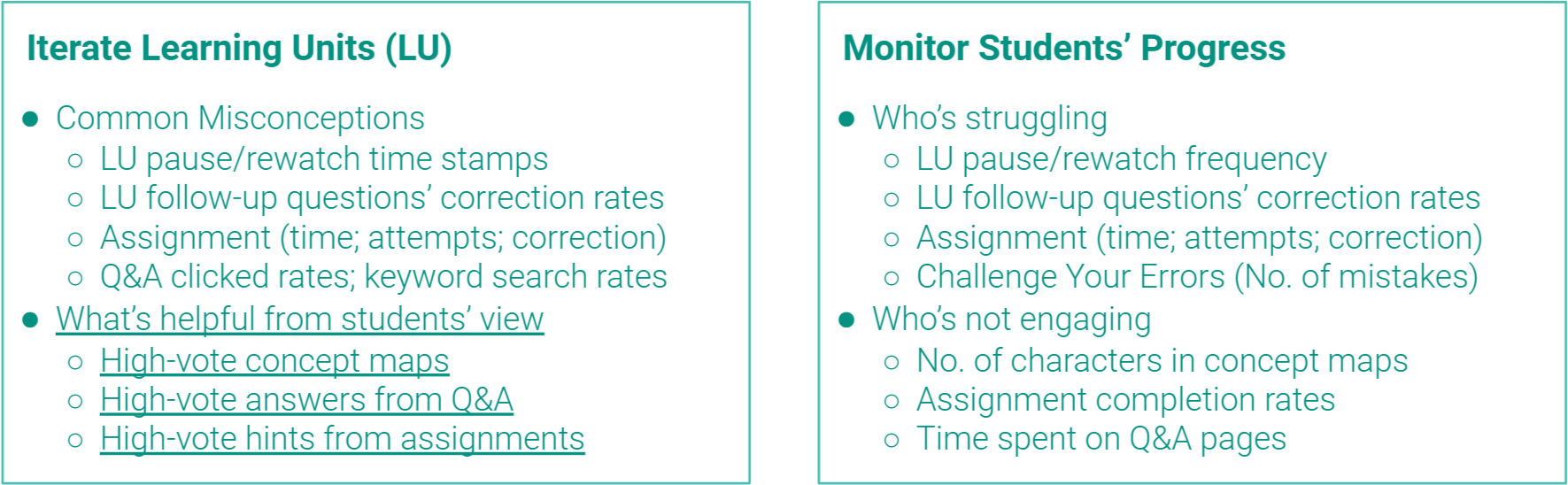

V. Reports for Teachers

After Dr. Ellis provided insights into what teachers want to know while teaching, I defined two main goals based on her points: 1) iterate on learning units, to understand students’ struggles and present materials better, and 2) monitor students’ progress, to provide timely support or reminders as needed. Below are the prospective subgoals and metrics.

To verify whether these metrics can support the teachers’ goals, further experiments or literature reviews are needed. Besides conducting statistical tests on quantitative data, we can also apply qualitative analysis to data such as “High-vote hints” and “High-vote answers,” which might provide rich insights that help teachers relate to students.

In addition, UX research should be conducted to ensure that the data acquisition process happens as expected. For example, if we want to understand common points of student confusion in a video, we may look to “LU rewatch time stamps” data; however, if students click randomly through a video trying to find the point where a certain topic is discussed, the time stamp data could be noisy and uninformative. By adding a clear preview image when students hover on the progress bar, we can help students navigate effectively and gather more meaningful data.
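Besides the UX fix, the noise in rewatch time stamps could also be reduced when the data is analyzed. The sketch below is one possible approach under stated assumptions, not part of the PeAce design: it assumes a hypothetical log of backward seeks (`SeekEvent`) that records how long a student kept watching after each jump, drops jumps followed by only a few seconds of viewing (likely searching rather than rewatching), and counts distinct students per time bucket. The thresholds `min_dwell_s` and `bucket_s` are illustrative defaults that would need tuning.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Set


@dataclass(frozen=True)
class SeekEvent:
    """One backward seek in a learning-unit video (hypothetical log format)."""
    student_id: str
    target_s: float  # position the student jumped back to, in seconds
    dwell_s: float   # how long they kept watching after the jump, in seconds


def rewatch_hotspots(
    events: Iterable[SeekEvent],
    min_dwell_s: float = 5.0,
    bucket_s: int = 10,
) -> Dict[int, int]:
    """Count distinct students per time bucket, ignoring short 'scrubbing' seeks."""
    students_per_bucket: Dict[int, Set[str]] = {}
    for event in events:
        if event.dwell_s < min_dwell_s:
            continue  # too short to be a real rewatch; likely searching
        bucket_start = int(event.target_s // bucket_s) * bucket_s
        students_per_bucket.setdefault(bucket_start, set()).add(event.student_id)
    return {start: len(ids) for start, ids in sorted(students_per_bucket.items())}


log = [
    SeekEvent("s1", target_s=15.0, dwell_s=1.0),   # scrubbing, ignored
    SeekEvent("s1", target_s=30.0, dwell_s=2.0),   # scrubbing, ignored
    SeekEvent("s1", target_s=62.0, dwell_s=40.0),  # real rewatch
    SeekEvent("s2", target_s=65.0, dwell_s=25.0),  # real rewatch, same bucket
    SeekEvent("s3", target_s=120.0, dwell_s=12.0),
]
print(rewatch_hotspots(log))  # {60: 2, 120: 1}
```

Counting distinct students rather than raw seeks keeps one student's repeated jumps from dominating a bucket, which matches the goal of finding points of confusion that are common across the class.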

Evaluation

Finally, I evaluated PeAce by answering two key questions: 1) PeAce integrates multiple collaboration modules into students’ learning process, but does this give students a much heavier workload? and 2) The “Inspiration Board” section listed 26 needs/pain points of students and teachers. How well does PeAce satisfy these needs or resolve these pain points?

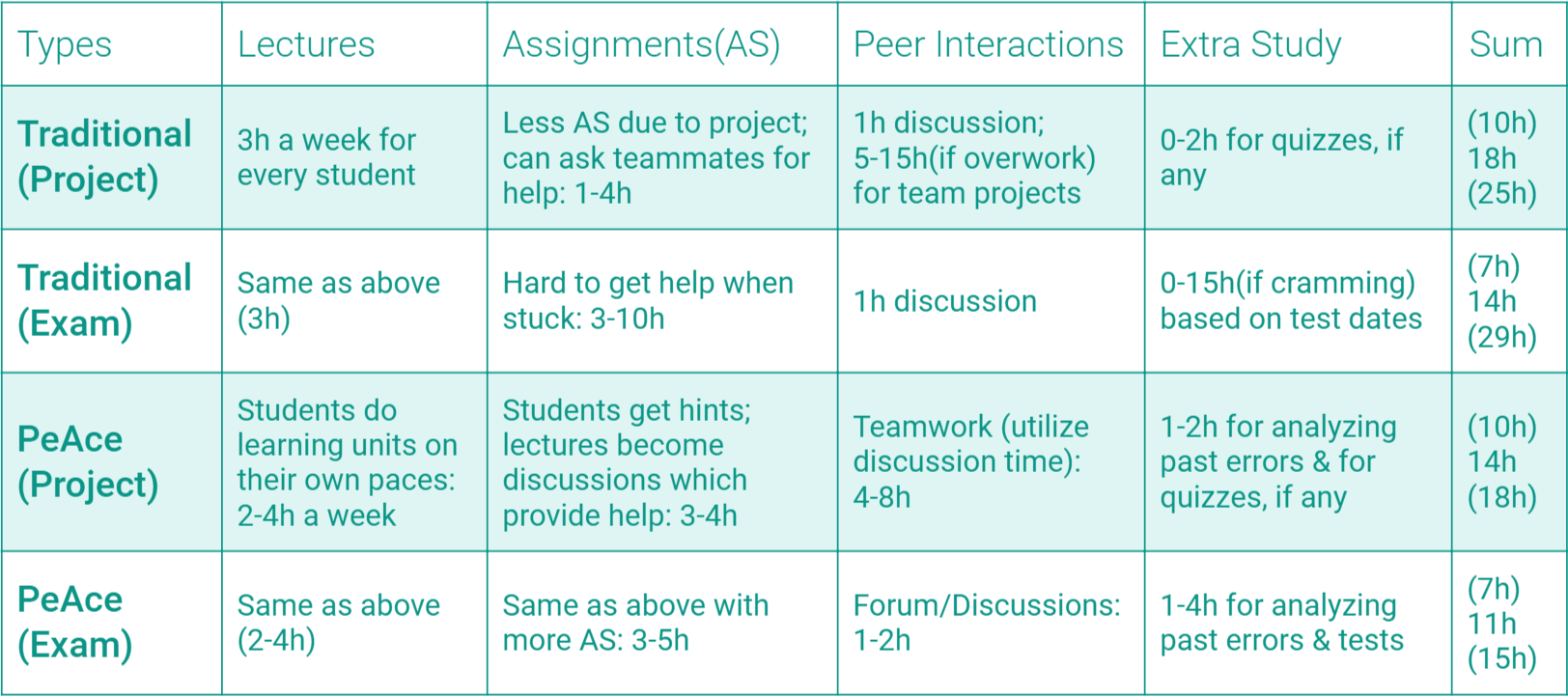

I. Students' Workload

At a glance, students may seem to have a huge workload in PeAce, but closer inspection shows otherwise. The table below compares the weekly workload of traditional STEM courses with the estimated workload of PeAce. I separated courses into two categories (Project & Exam), because a project-centered course usually has no major exams. The “Sum” column shows the lower bound, average value, and upper bound of the weekly workload for each type.

The lower bound (usually the strongest students) for traditional courses and PeAce is the same for either project-centered or exam-centered types, but

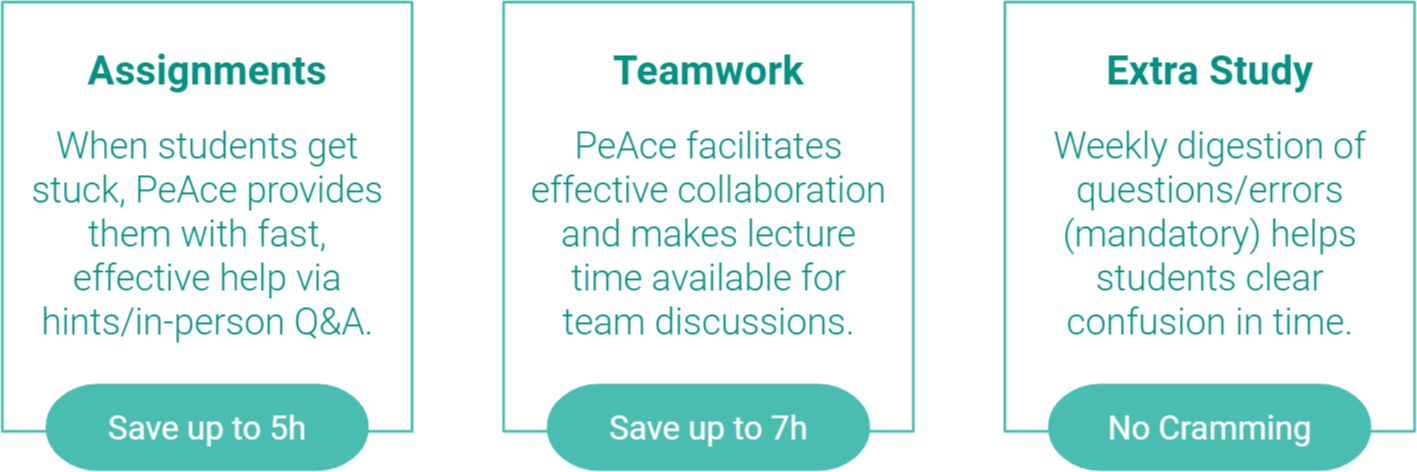

the average and upper bound workload of PeAce is significantly less than those of traditional courses. PeAce achieves this from the perspectives below:

II. Needs/Pains Review

The figures below summarize how PeAce performs with respect to the 26 needs/pain points. Darker colors indicate where PeAce improves on existing solutions, while gray cells indicate where PeAce falls short of the best existing solutions.

For individuals, PeAce can’t satisfy the 3rd need as well as existing platforms, mainly because of the constraints of a college setting. Students might not have clear career goals, and it’s hard to apply the robot from the Betty’s Brain system to general subjects. However, PeAce provides motivation through social incentives.

For collaborators, PeAce outperforms many existing platforms. However, PeAce’s attempts to resolve the 2nd, 3rd, and 4th pain points have no prior work to build upon, so their effectiveness needs further investigation.

For teachers, PeAce requires interactions between teachers and students, which generate more workload than standardized courses without instructors. However, these interactions are a good fit for a college setting, where they promote student-faculty relationships.

Overall, PeAce combines the merits of different platforms and makes considerable improvements, especially on the needs/pain points of collaborators. PeAce’s integration of collaborative learning reduces students’ workload by increasing the effectiveness of each peer interaction. PeAce also strengthens students’ meta-cognitive skills through the practices of concept-map editing, peer instruction, and error digestion.

Limitations & Vision

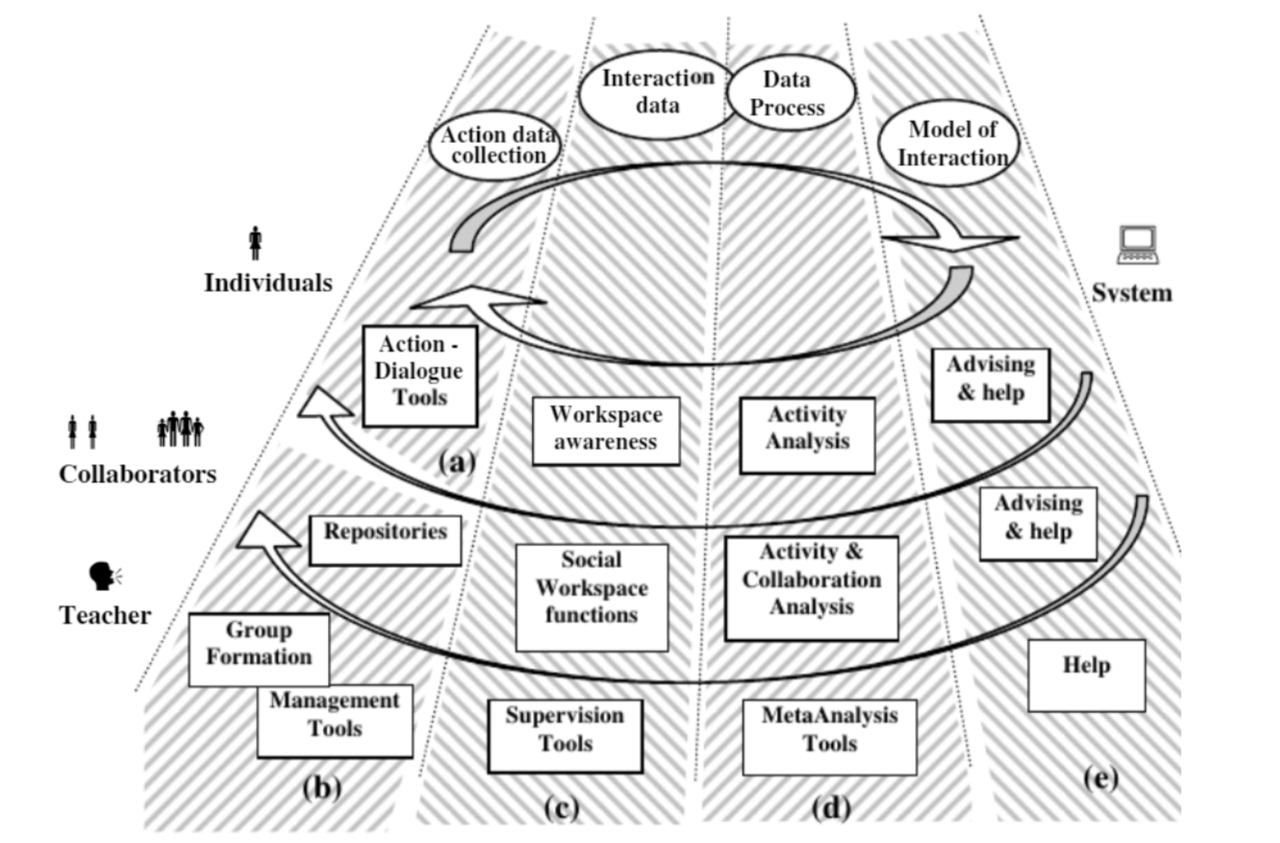

Due to the time constraint of an 8-week project, the number of publications and platforms reviewed was limited. The framework is designed to be used flexibly in three types of situations, as indicated below, but this generality needs to be verified with a more concrete UX prototype. In addition, the future prototype should consider incentives for students and teachers to switch from existing platforms, and a sustainable business model should be designed.

There is also a concern about the use of concept maps. The researchers of the Betty’s Brain system found that 45% of students couldn’t edit concept maps effectively due to a lack of motivation and confusion about the interface [15]. Although PeAce modifies the UX design and provides incentives for students to edit the concept maps thoughtfully, further research should be carried out to test whether students behave as expected.

Currently, the proposed model has no technical implementation. Many features (auto-graded questions, the Q&A forum, etc.) have already been implemented in existing platforms. The three likely challenges lie in the implementation of concept maps, the hint filtering and recommendation algorithms, and the AI-generated questions for the “Challenge Your Errors” module. Also, the proposed metrics in the reports for teachers need further verification.

Although PeAce is a newly proposed system that still needs considerable future work, it conveys a unique vision.

PeAce aims to cultivate not just learners, but also collaborators. We hope that after using PeAce, students will develop skills to effectively help others learn, as well as a zeal to do so. We believe that the goal of learning is not to simply master materials, but to share and build upon each other’s knowledge, making this world a better place — a place with more understanding and peace (PeAce).

Acknowledgement & Ref.

Lots of thanks to Dr. Ellis for sharing insightful publications in addition to the ones I found, giving feedback on my progress and plan every week, brainstorming ideas with me from a teacher’s perspective, proofreading my case study writing, and showing me directions when I felt uncertain or got stuck.

Lots of thanks to Dr. Kirsten Kung and the UC Scholars Program for all the support.

Lots of thanks to all the researchers, designers, and developers of existing educational platforms. All the information and inspiration they gave me was really precious in my diverge-and-converge, back-and-forth design process. Thanks to Favicon.io for the free icons.

References

[1] Conati, C. (2009). Intelligent Tutoring Systems: New Challenges and Directions. Retrieved July 09, 2020, from https://www.aaai.org/ocs/index.php/IJCAI/IJCAI-09/paper/view/671/576

[2] Kumar A., Bharti V. (2020). Contribution of Learner Characteristics in the Development of Adaptive Learner Model. In: Choudhury S., Mishra R., Mishra R., Kumar A. (eds) Intelligent Communication, Control and Devices. Advances in Intelligent Systems and Computing, vol 989. Springer, Singapore

[3] Neil T. Heffernan. (1998). Intelligent tutoring systems have forgotten the tutor: adding a cognitive model of human tutors. In CHI 98 Conference Summary on Human Factors in Computing Systems (CHI ’98). Association for Computing Machinery, New York, NY, USA, 50–51. DOI: 10.1145/286498.286524

[4] Baba Kofi A. Weusijana, Christopher K. Riesbeck, and Joseph T. Walsh. (2004). Fostering reflection with Socratic tutoring software: results of using inquiry teaching strategies with web-based HCI techniques. In Proceedings of the 6th international conference on Learning sciences (ICLS ’04). International Society of the Learning Sciences, 561–567.

[5] Squirrel AI IALS

[6] Paul A. Kirschner, John Sweller, and Richard E. Clark. (2006). Why Minimal Guidance During Instruction Does Not Work: An Analysis of the Failure of Constructivist, Discovery, Problem-Based, Experiential, and Inquiry-Based Teaching. Educational Psychologist, 41:2, 75–86. DOI: 10.1207/s15326985ep4102_1

[7] Leelawong, K., & Biswas, G. (2008). Designing Learning by Teaching Agents: The Betty's Brain System. International Journal of Artificial Intelligence in Education, vol. 18, no. 3, pp. 181–208.

[8] Geoffrey L. Herman and Sushmita Azad. (2020). A Comparison of Peer Instruction and Collaborative Problem Solving in a Computer Architecture Course. In Proceedings of the 51st ACM Technical Symposium on Computer Science Education (SIGCSE ’20). Association for Computing Machinery, New York, NY, USA, 461–467. DOI: 10.1145/3328778.3366819

[9] Tullis, J.G., Goldstone, R.L. (2020). Why does peer instruction benefit student learning? Cogn. Research 5, 15. DOI: 10.1186/s41235-020-00218-5

[10] Sun, Chen et al. (2020). Towards a Generalized Competency Model of Collaborative Problem Solving. Computers & Education, 143. DOI: 10.1016/j.compedu.2019.103672

[11] Angelique Dimitracopoulou. (2005). Designing collaborative learning systems: current trends & future research agenda. In Proceedings of the 2005 conference on Computer support for collaborative learning: learning 2005: the next 10 years! (CSCL ’05). International Society of the Learning Sciences, 115–124.

[12] Epic Guide to Stepik; Access Statistics of Learners

[13] Python Tutor Introduction Page; Code Visualization Page

[14] Codecademy Landing Page; Codecademy Business Features; Codecademy Forum

[15] Biswas, G., Segedy, J.R. & Bunchongchit, K. (2016). From Design to Implementation to Practice a Learning by Teaching System: Betty’s Brain. Int J Artif Intell Educ 26, 350–364. DOI: 10.1007/s40593-015-0057-9

[16] Why Piazza Works

[17] Carnegie Learning; CL Promotion Video